
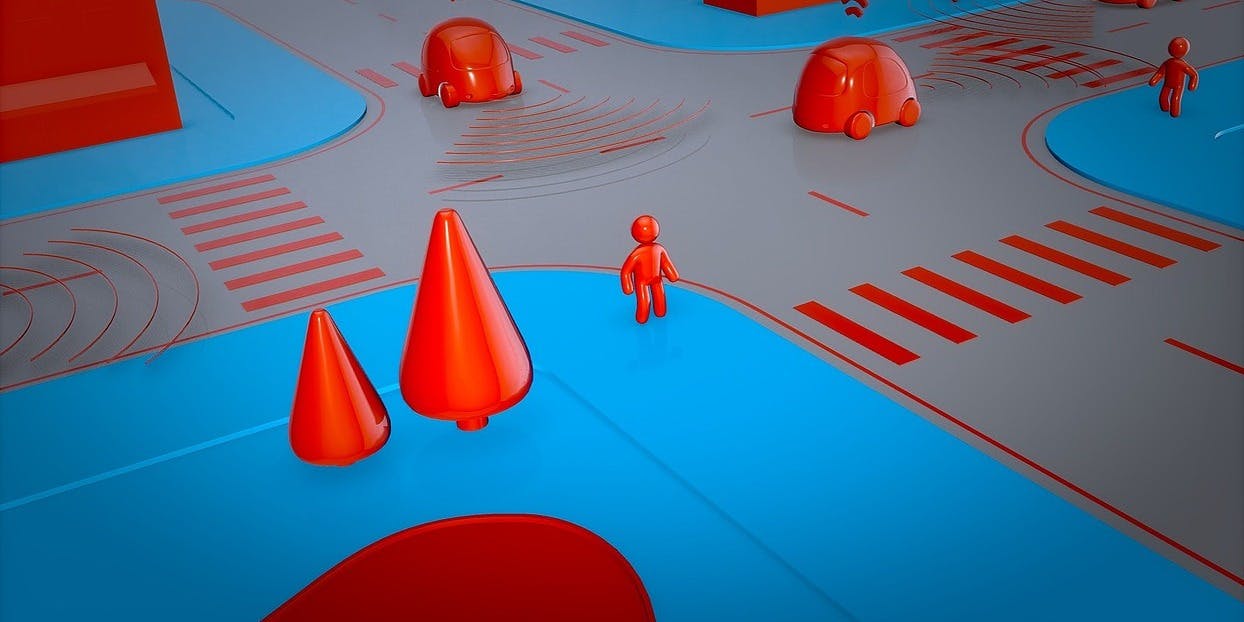

Image Annotation Types For Computer Vision And Their Use Cases

by

September 7th, 2019


Machine Learning, Computer Vision, and Artificial Intelligence Enthusiast.
