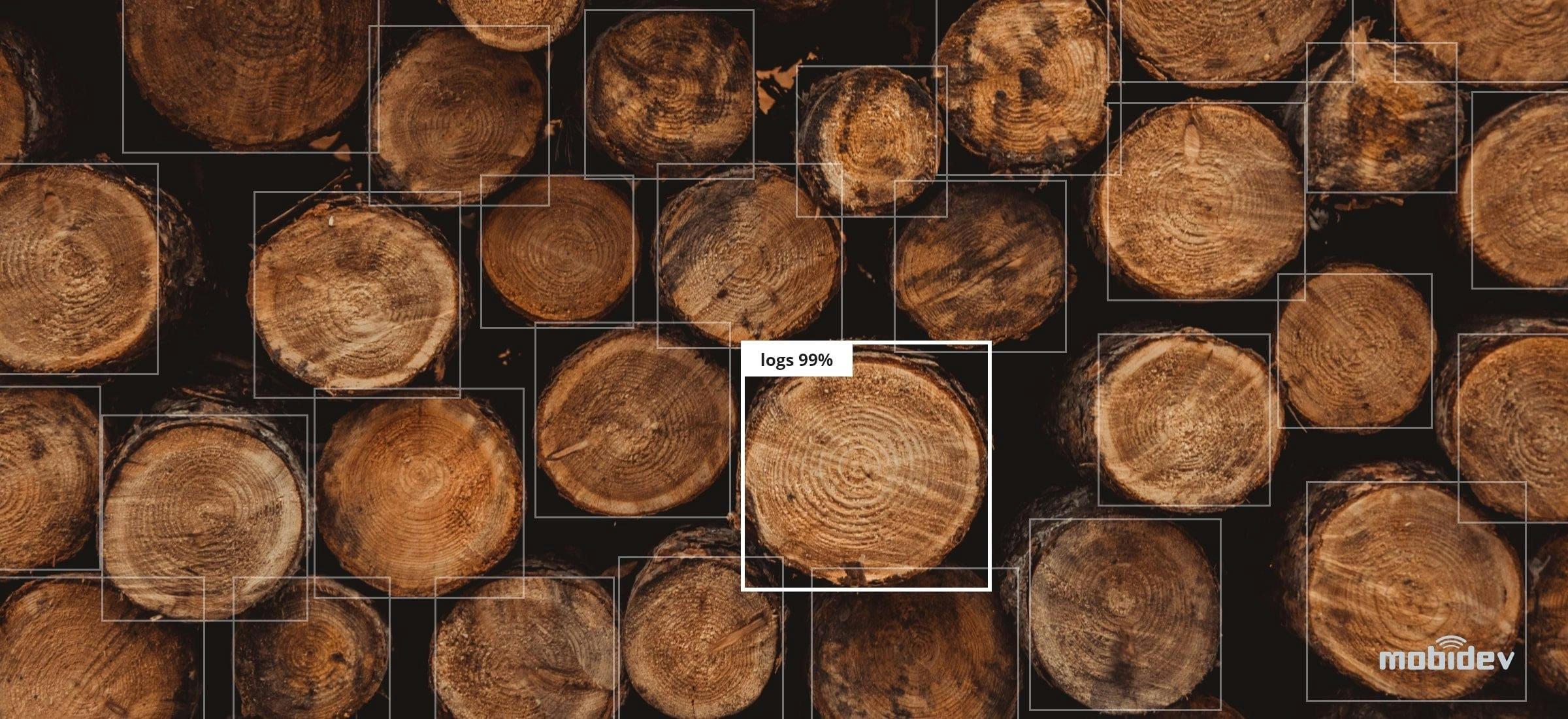

How Much Data is Enough for Small Dataset-Based Object Detection?

by

September 21st, 2021

Trusted software development company since 2009. Custom DS/ML, AR, IoT solutions https://mobidev.biz
