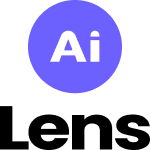

Where Can Dreams Take You? The Art of Teaching Associative Thinking to Machines

by

May 10th, 2020

About Author

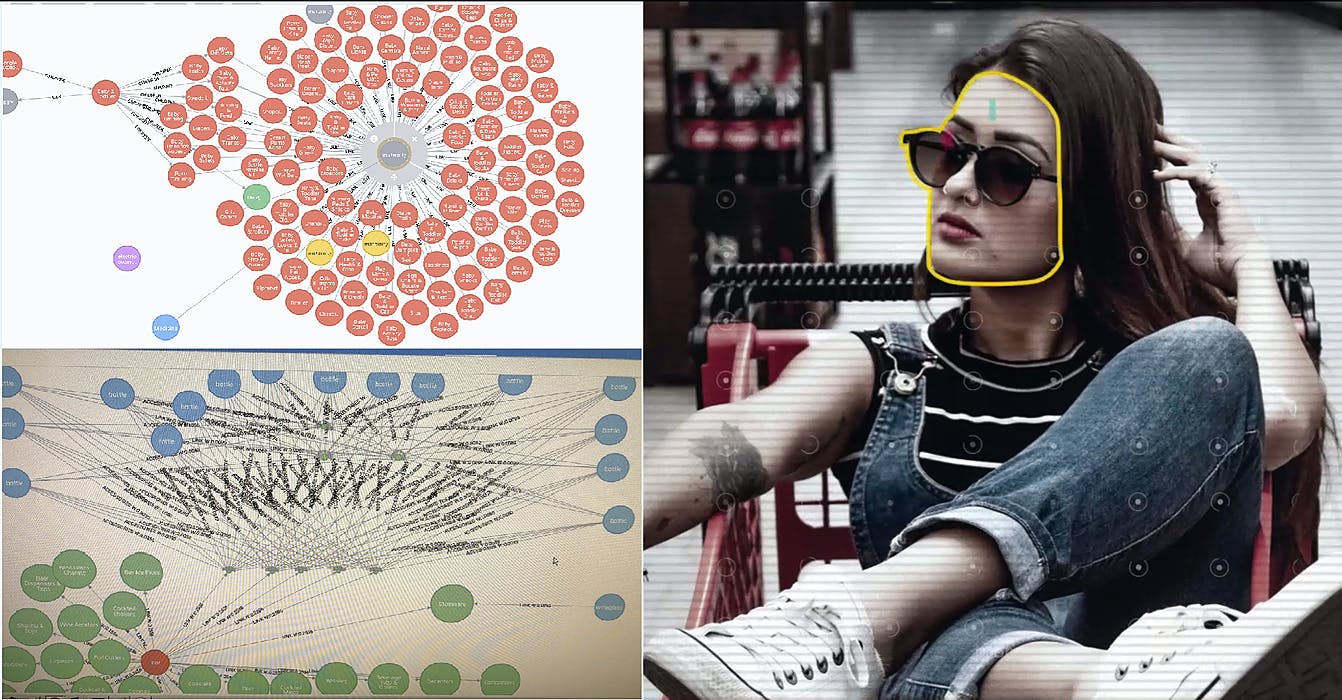

AI-powered contextual computer vision ad platform that monetizes any visual content
