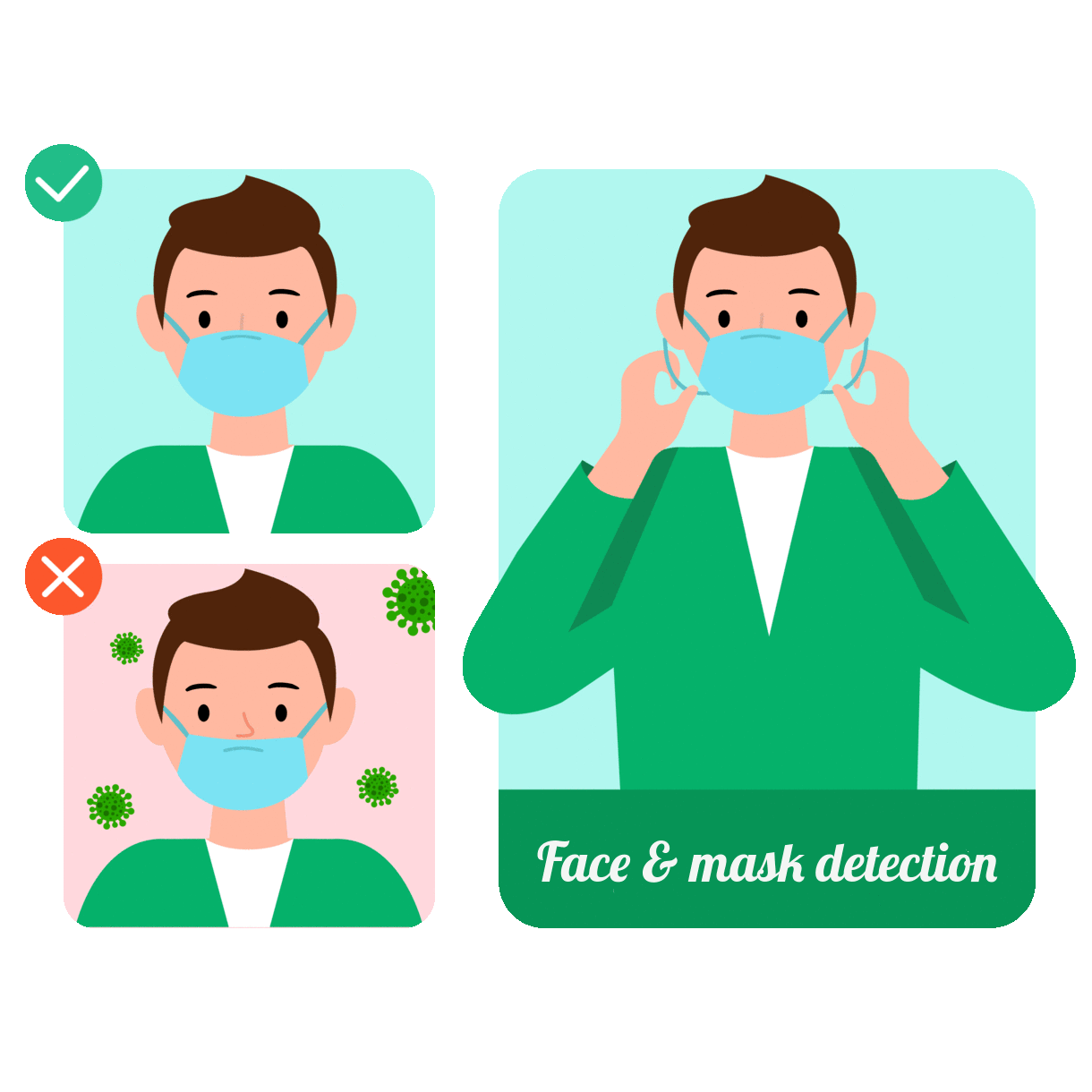

We Built a Face and Mask Detection Web App for Google Chrome

by

March 7th, 2021

About Author

Skilled front-end engineer with 7 years of experience developing web and Smart TV applications