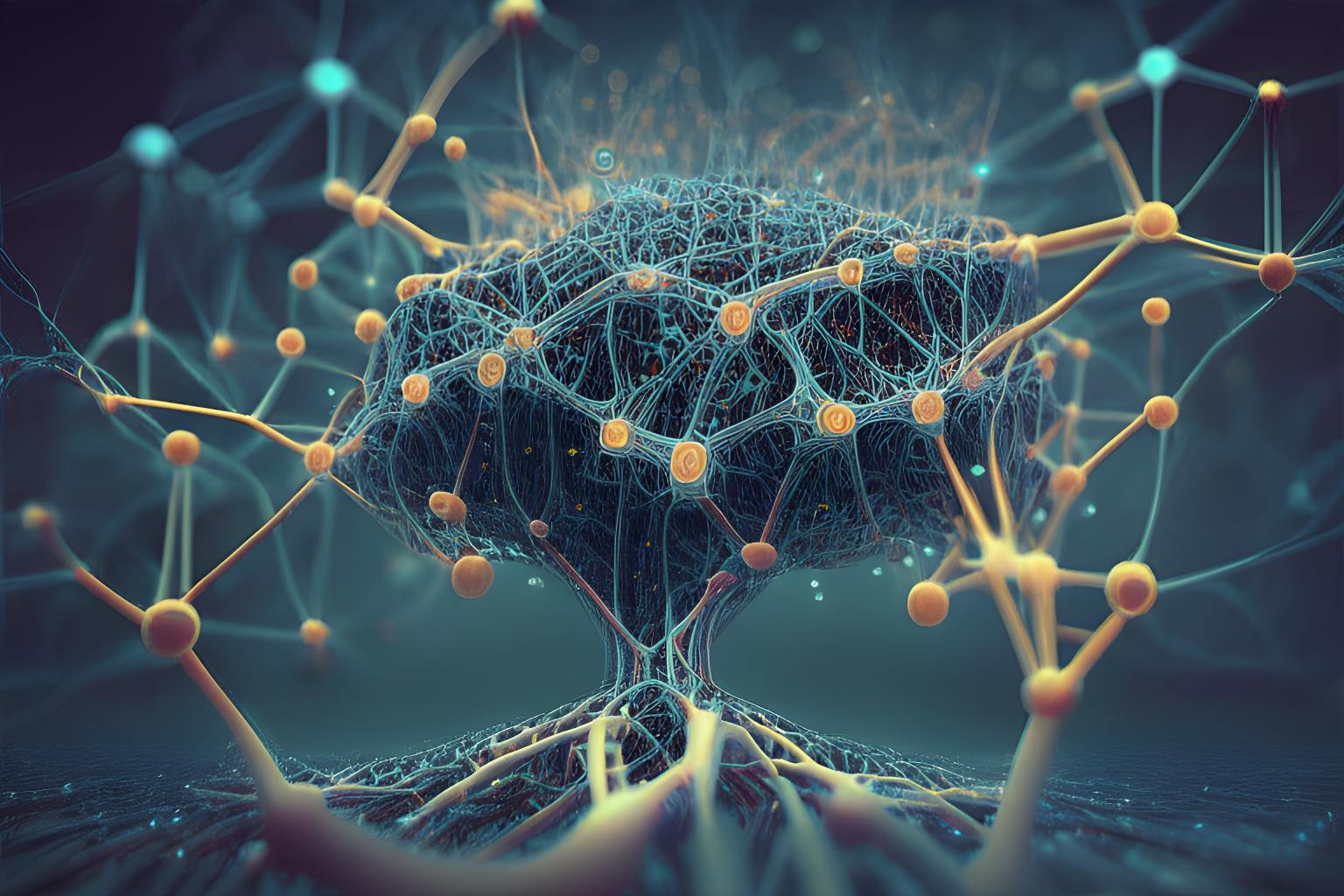

Neural Network Layers: All You Need Is Inside Comprehensive Overview

by

July 17th, 2023

Data science expert with a desire to help companies advance by applying AI to process improvement.
