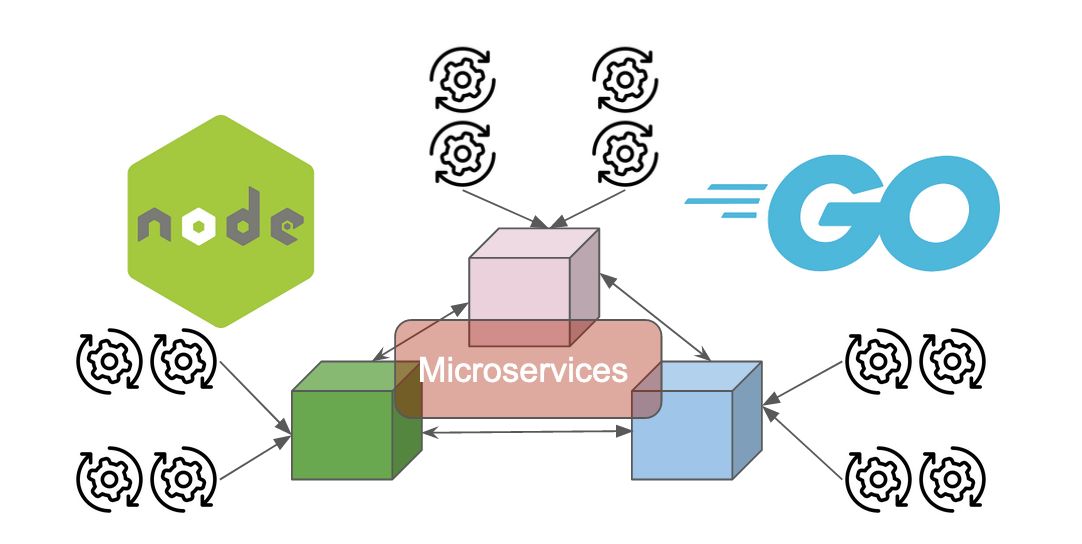

Microservices Deserve Modern Programming Platforms: Java May Not Be the Best Option

by

October 1st, 2020

Passionate about software and what happens around it. Views and thoughts here are my own.
