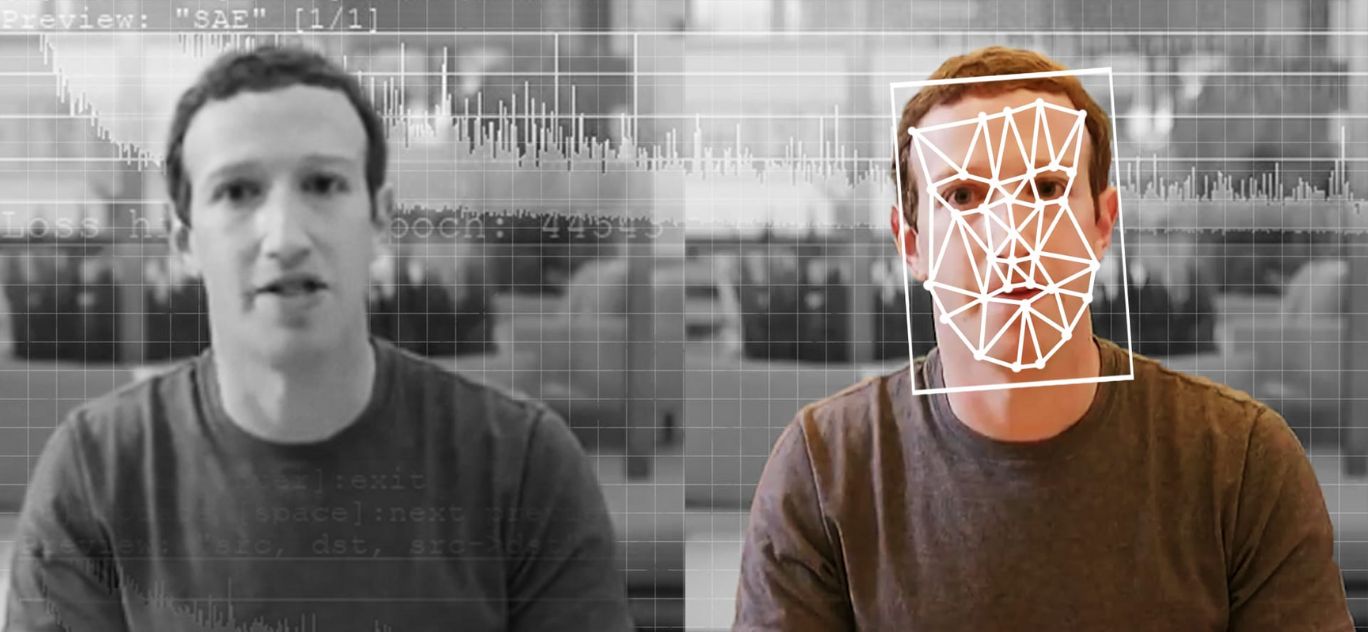

Methods and Plugins to Spot Deepfakes and AI-Generated Text

by

August 17th, 2020

Director of Content @ISNation & HackerNoon's Editorial Ambassador by day, VR Gamer and Anime Binger by night.
