
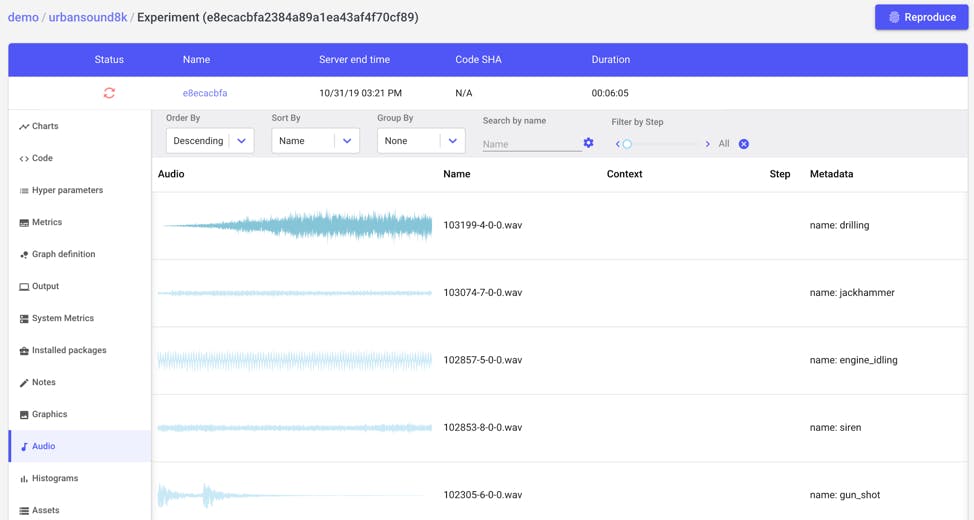

How To Apply Machine Learning And Deep Learning Methods to Audio Analysis

by

November 18th, 2019


Giving data scientists and teams the ability to track, compare, explain, and reproduce ML experiments.
