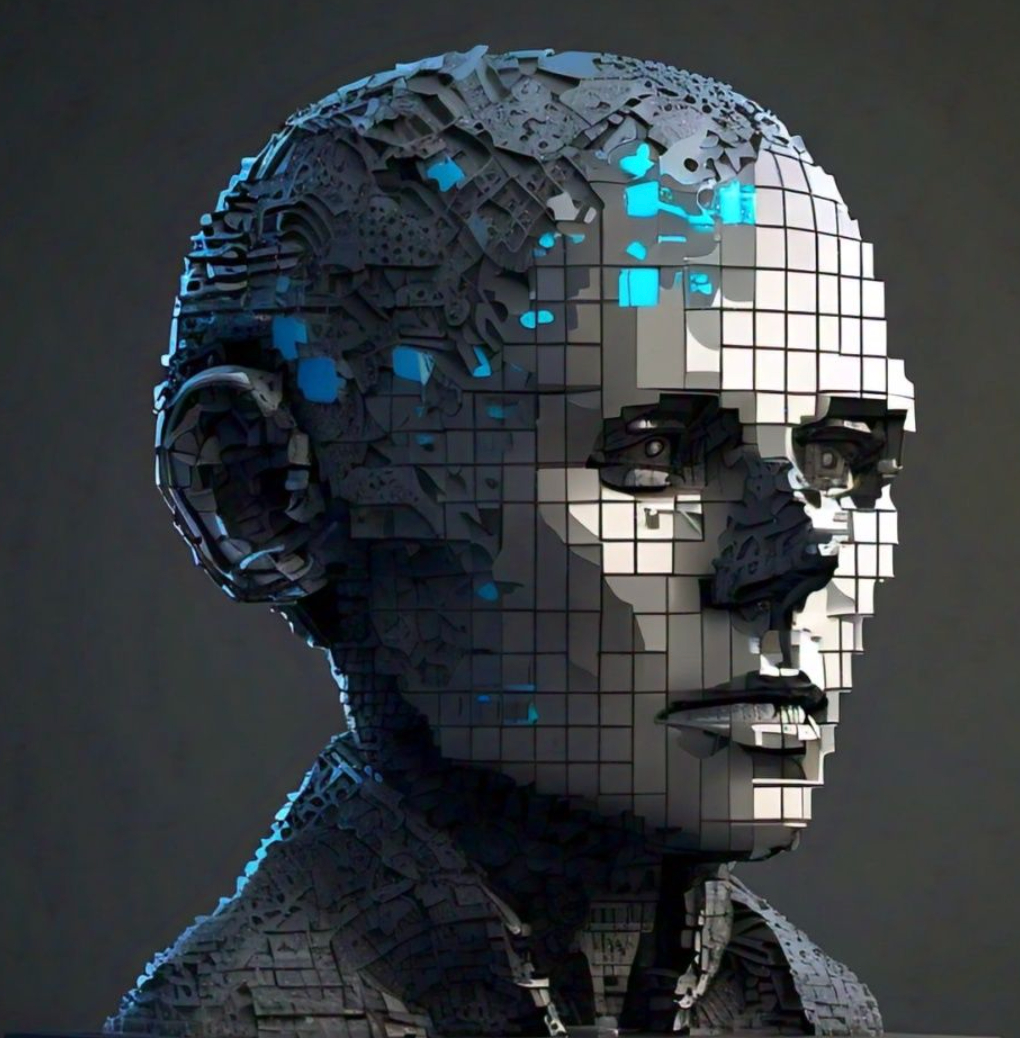

Formal Definition of the Conscious Turing Machine Robot

September 3rd, 2024

AIthics illuminates the path forward, fostering responsible AI innovation, transparency, and accountability.
