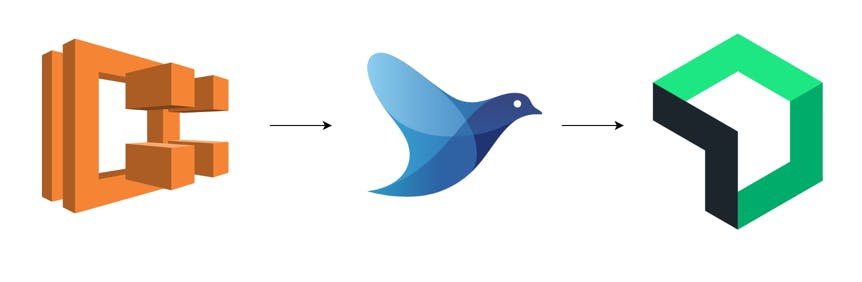

Centralized Logging for AWS ECS in New Relic using FluentBit

July 13th, 2024

Skilled Software Engineer with more than 10 years of experience in backend development