AI Will Put Programmers out of Work in 2025

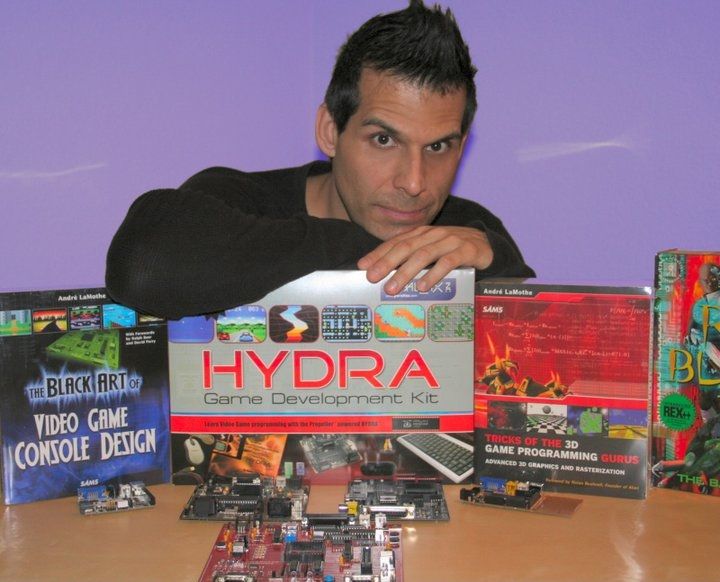

by

November 7th, 2023

Computer Scientist, Electrical Engineer, Game Developer, Author, CEO, and Girl Dad.
