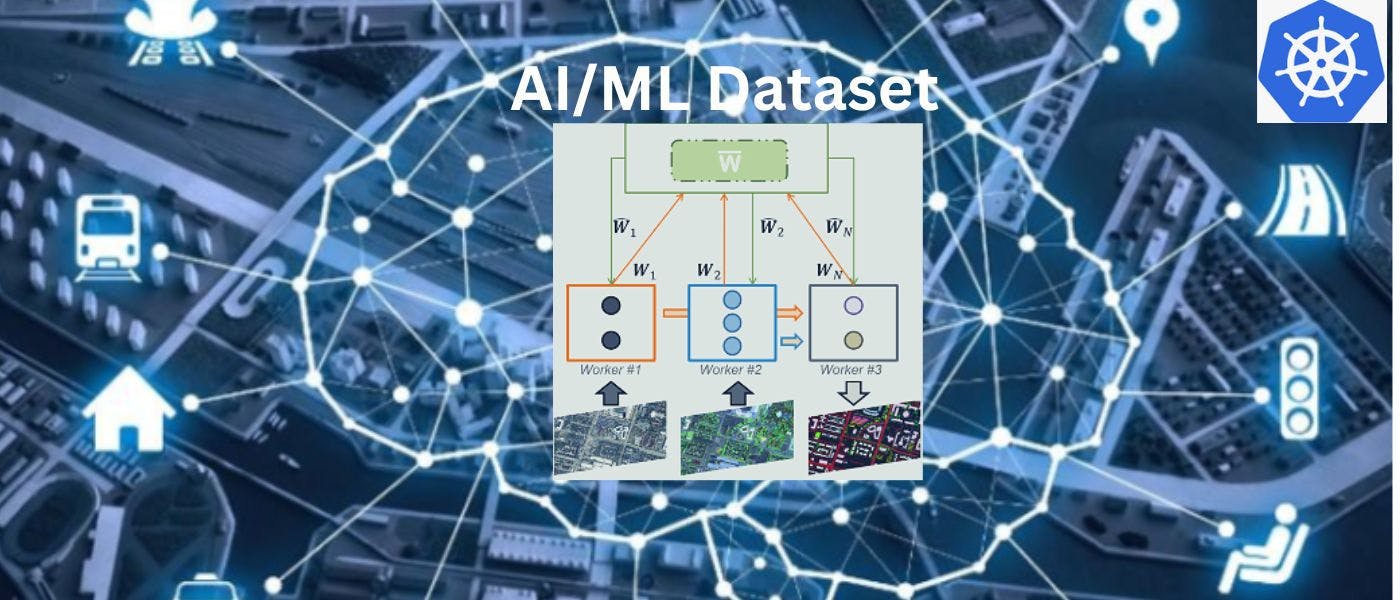

Why Use Kubernetes for Distributed Inferences on Large AI/ML Datasets

by

October 16th, 2022

Priya: 10 yrs. of exp. in research & content creation, spirituality & data enthusiast, diligent business problem-solver.