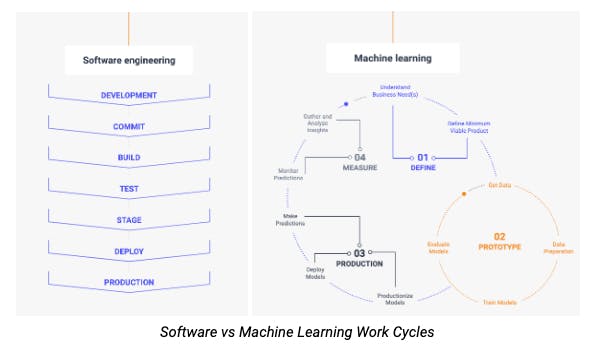

Why Software Engineering Processes and Tools Don't Work for Machine Learning

December 5th, 2019

Allowing data scientists and teams to track, compare, explain, and reproduce ML experiments.
