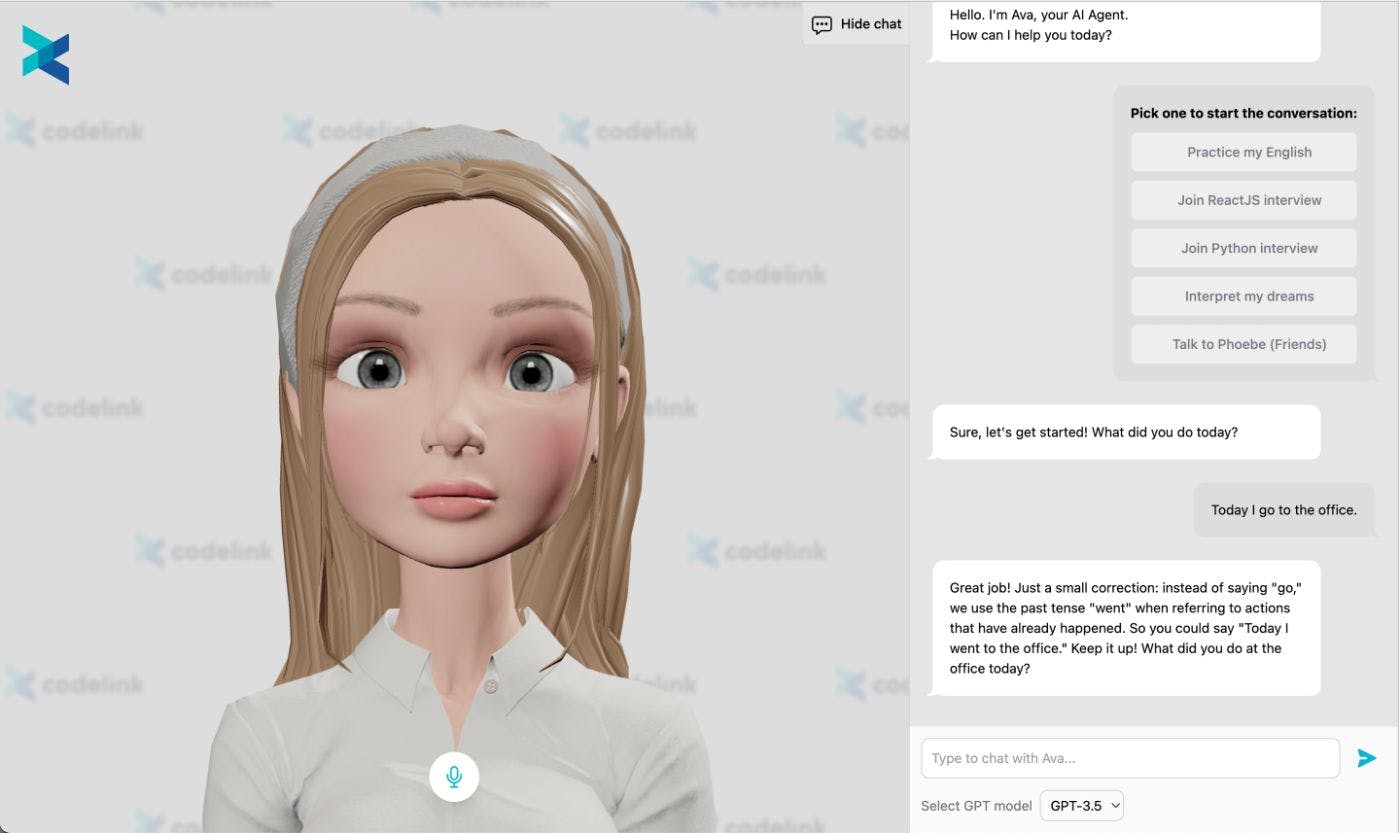

We Created a Simulated Video Call Demo with ChatGPT

July 6th, 2023

We provide high-quality professionals to help clients build and release high-impact products.
