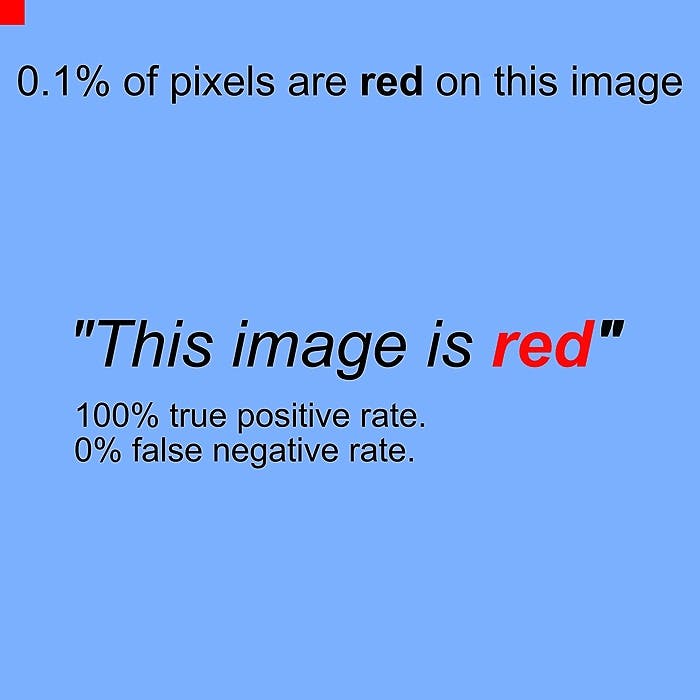

This Image is Red

by

September 26th, 2019

CTO & Co-founder at @cornis_SAS / math nerd of @podcastscience / improvisation at @watchimprov