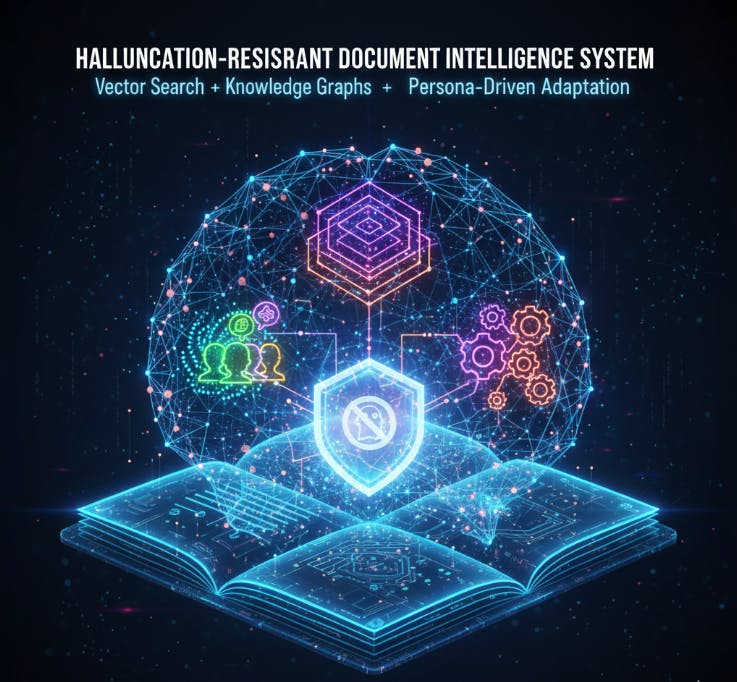

Stop Hallucinations at the Source: Hybrid RAG That Checks Itself

by

October 6th, 2025

Building tomorrow's software today. AI-powered mobile apps • Carrier-grade infrastructure • Open source security • Hack