1,270 reads

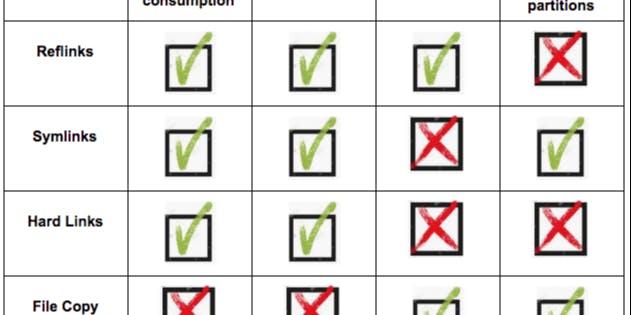

Reflinks vs symlinks vs hard links, and how they can help machine learning projects

by

August 17th, 2019

A writer and software engineer based in Silicon Valley, focused on machine learning, EV's, Node.js.

About Author

A writer and software engineer based in Silicon Valley, focused on machine learning, EV's, Node.js.