I am a senior full-stack developer. I love to create things for people to use! Founder of https://recalllab.com

Story's Credibility

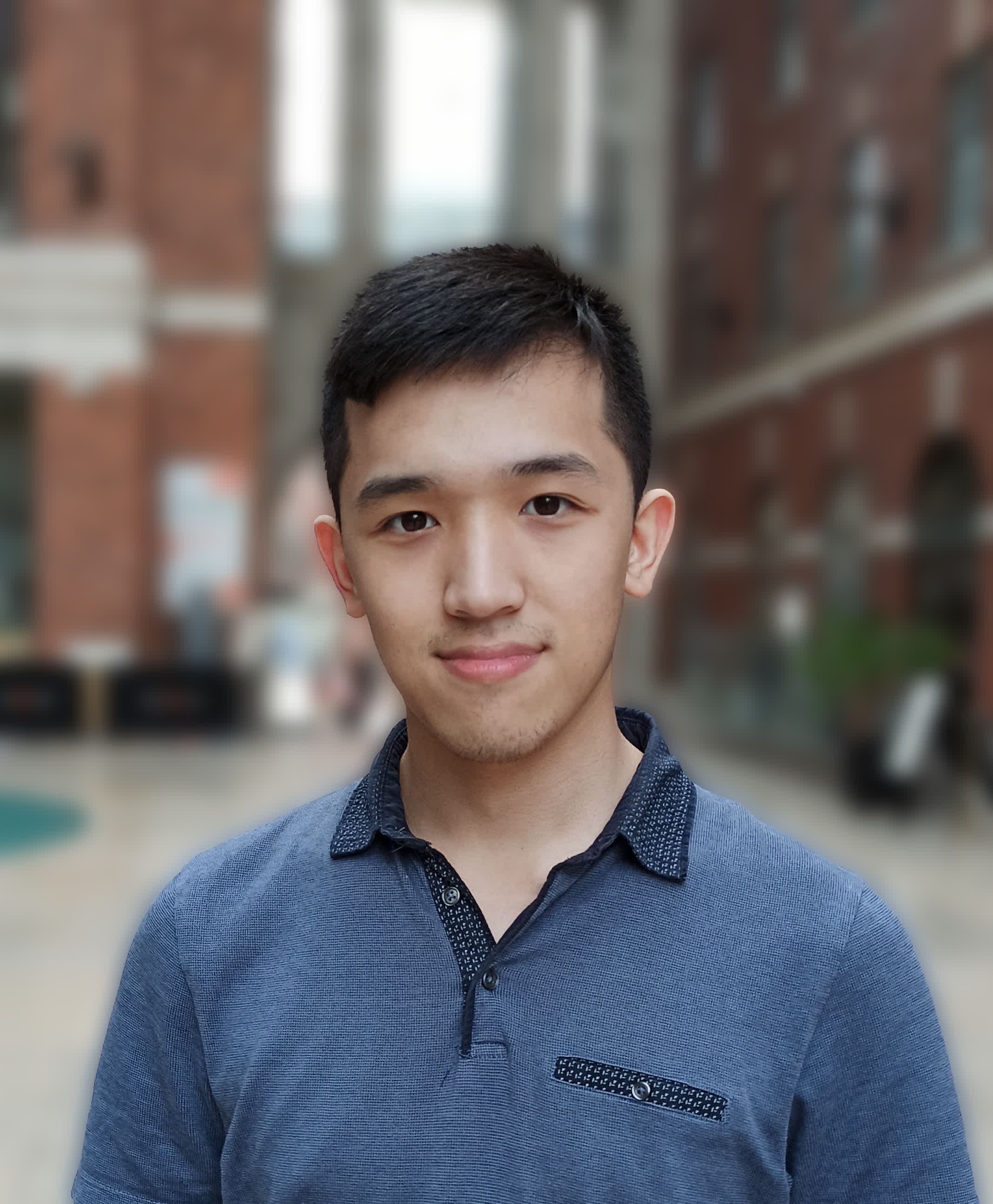
