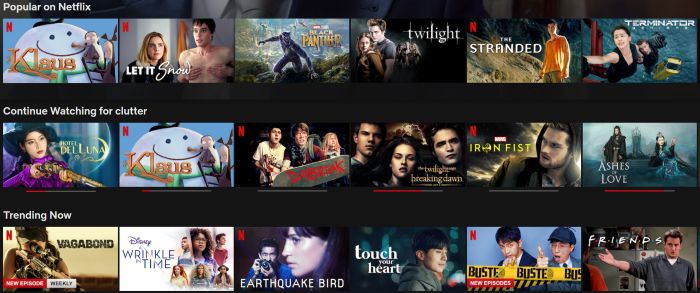

Content-Based Recommender Using Natural Language Processing (NLP)

August 11th, 2020

perpetual student | fitness enthusiast | passionate explorer | https://github.com/jnyh
