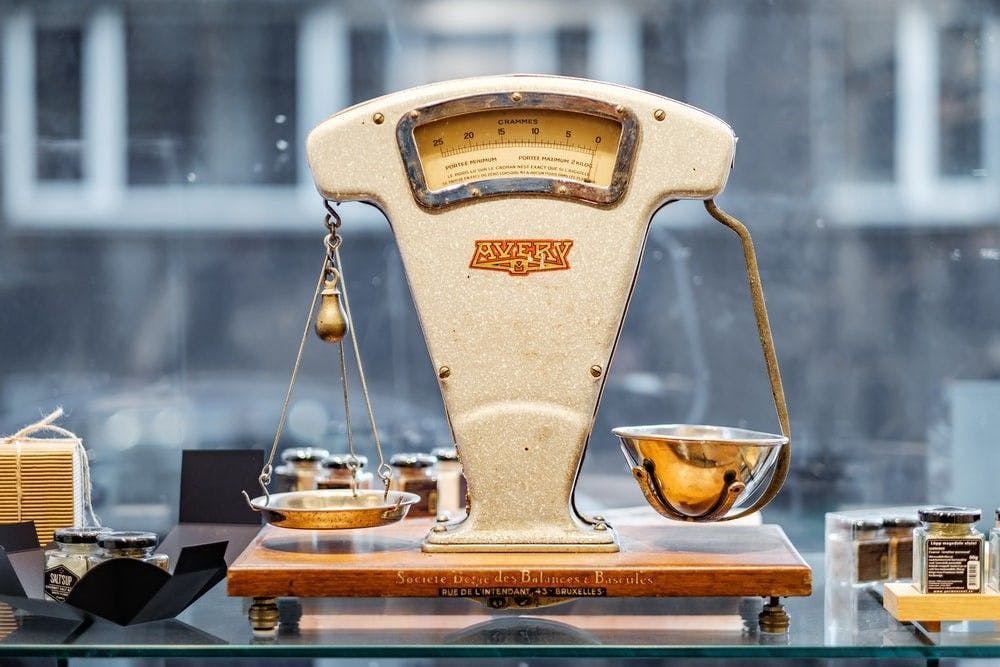

An Overview of Load Balancing Challenges

by

June 19th, 2021


About Author

A software engineer who desires to realize the imaginations of future life through technology.