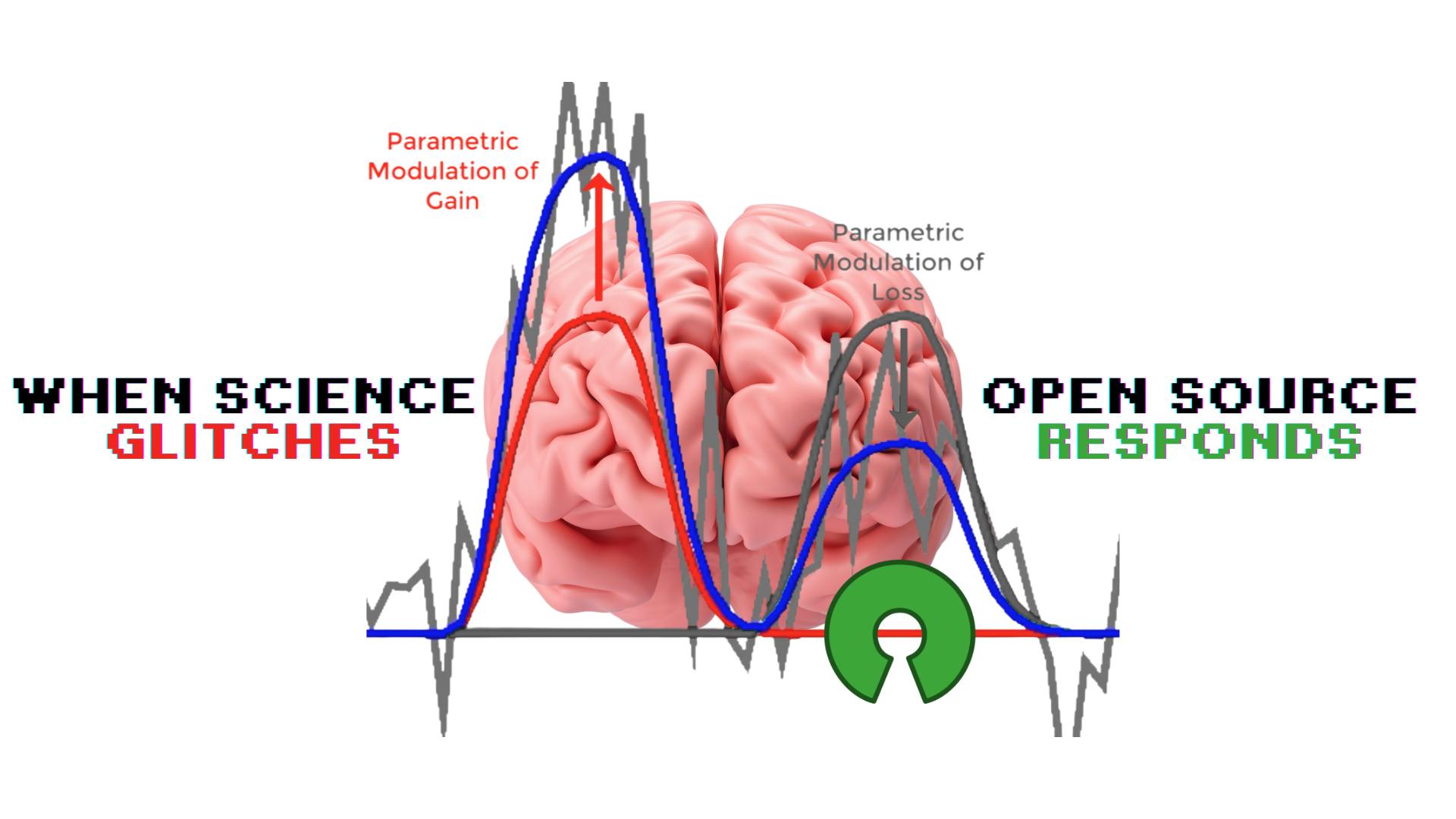

The Bug, The Black Box, and the Brain Map: What AFNI vs. SPM Taught Us About Open Source & Science

by

July 14th, 2025

Focused on the open source software supply chain to build a better digital future for all of us.
