Security in Generative AI Infrastructure Is of Critical Importance

by

November 16th, 2024

Audio Presented by

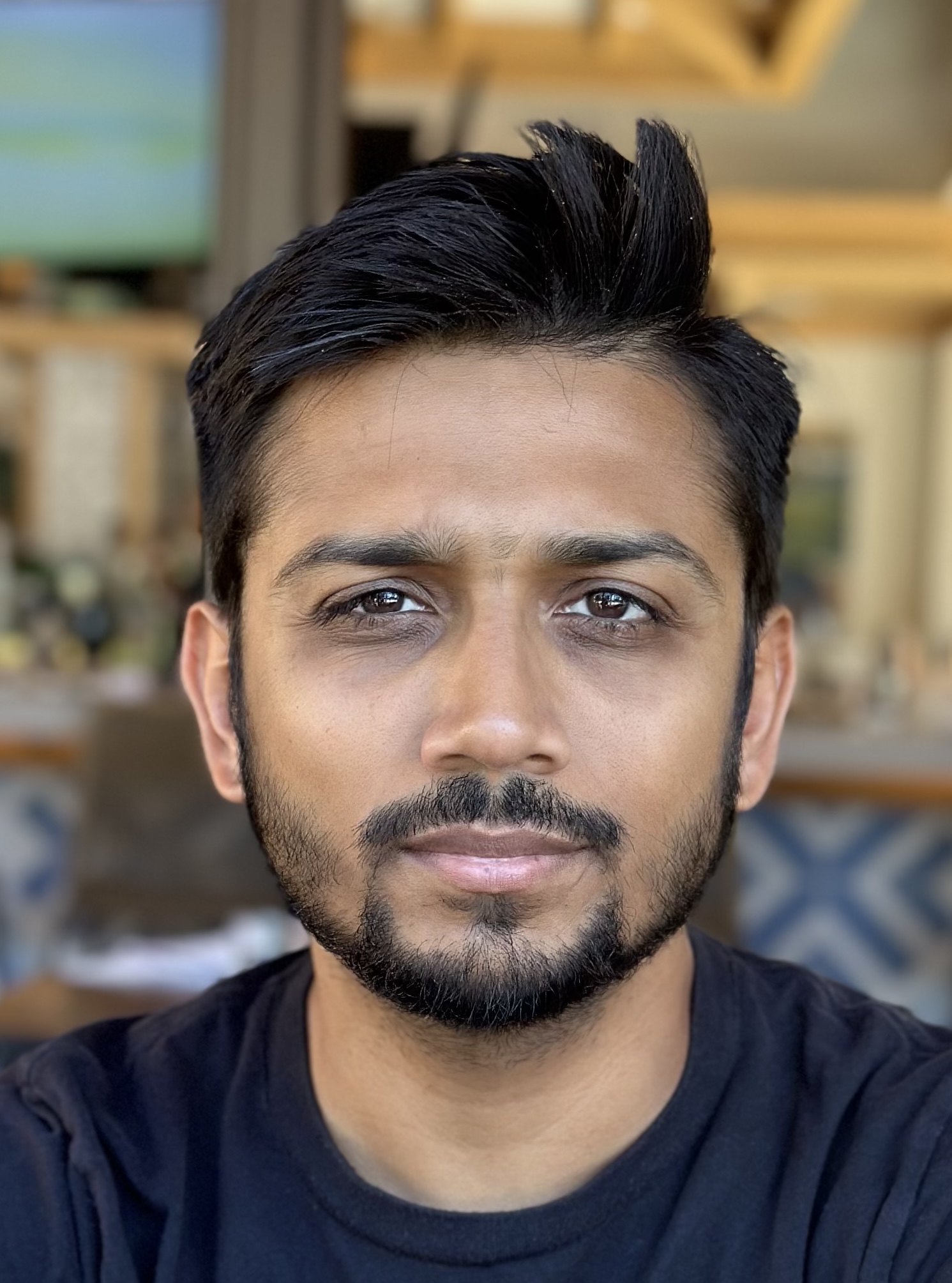

Interested in Performance and Security in Highly Available and Scalable Systems. Currently at Meta