Building a Secure Future: The Ethical Imperative of Prioritizing Security in Digital Architecture

by

August 1st, 2024

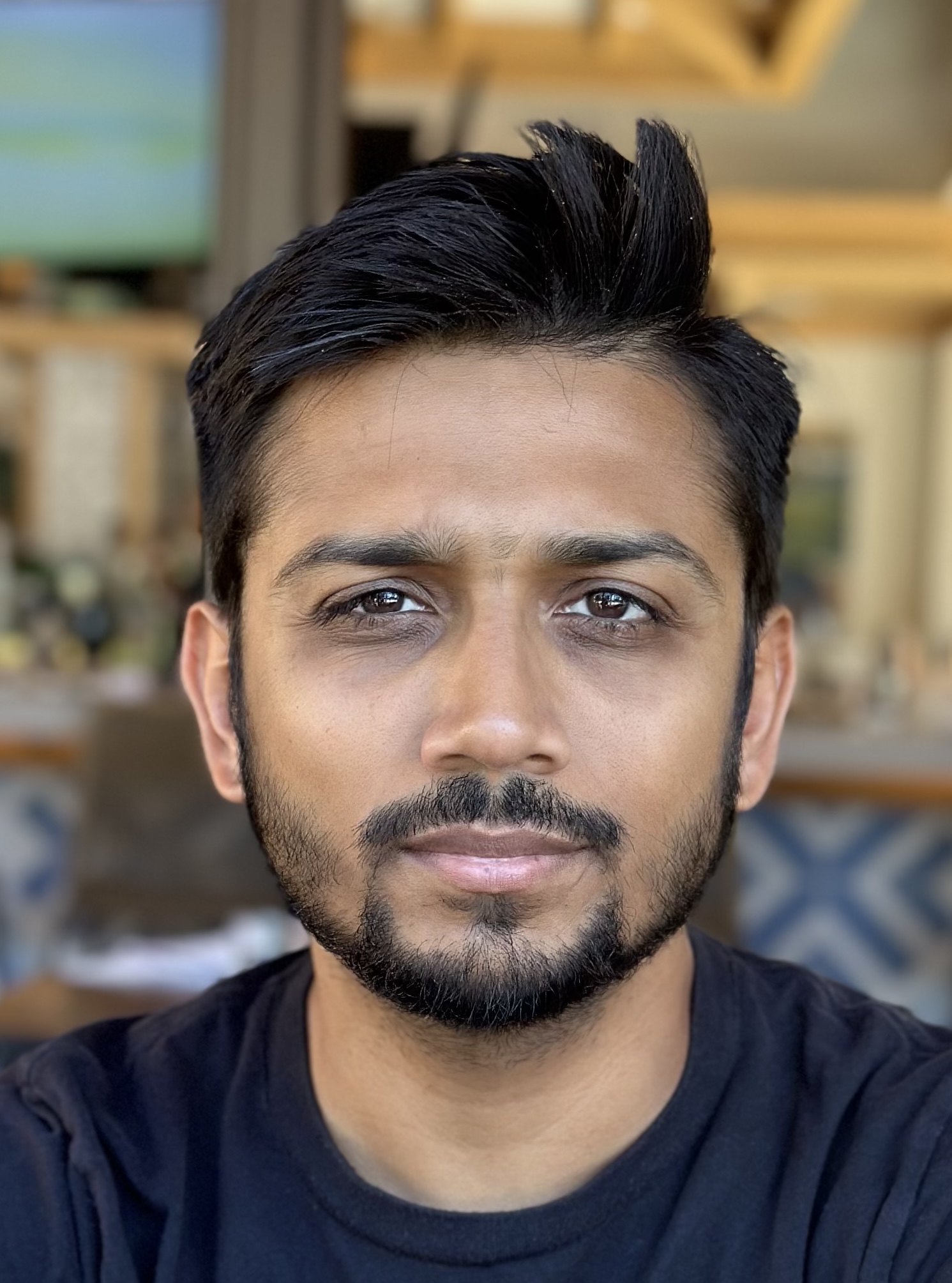

Interested in performance and security in highly available and scalable systems. Currently at Meta.
