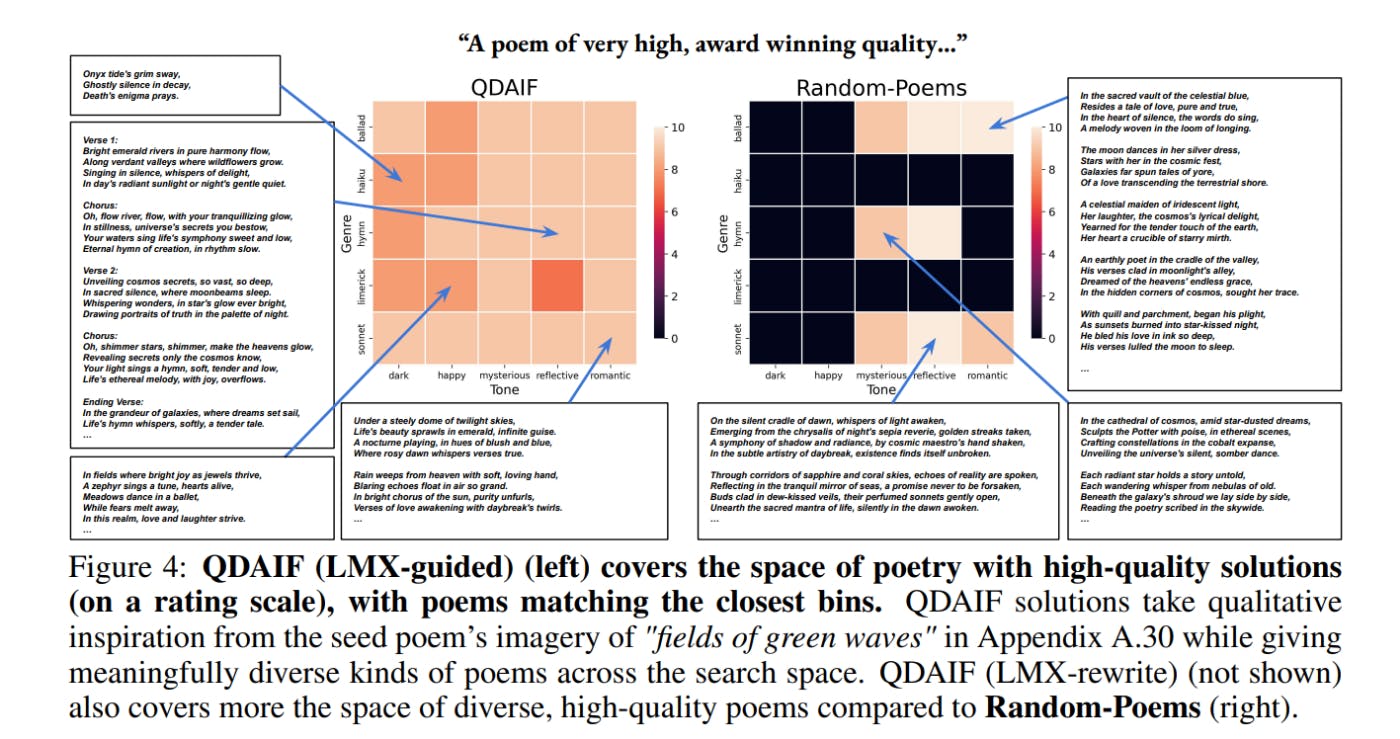

Revolutionizing Creative Text Generation with Quality-Diversity through AI Feedback

by

January 26th, 2024

About Author

The FeedbackLoop offers premium product management education, research papers, and certifications. Start building today!