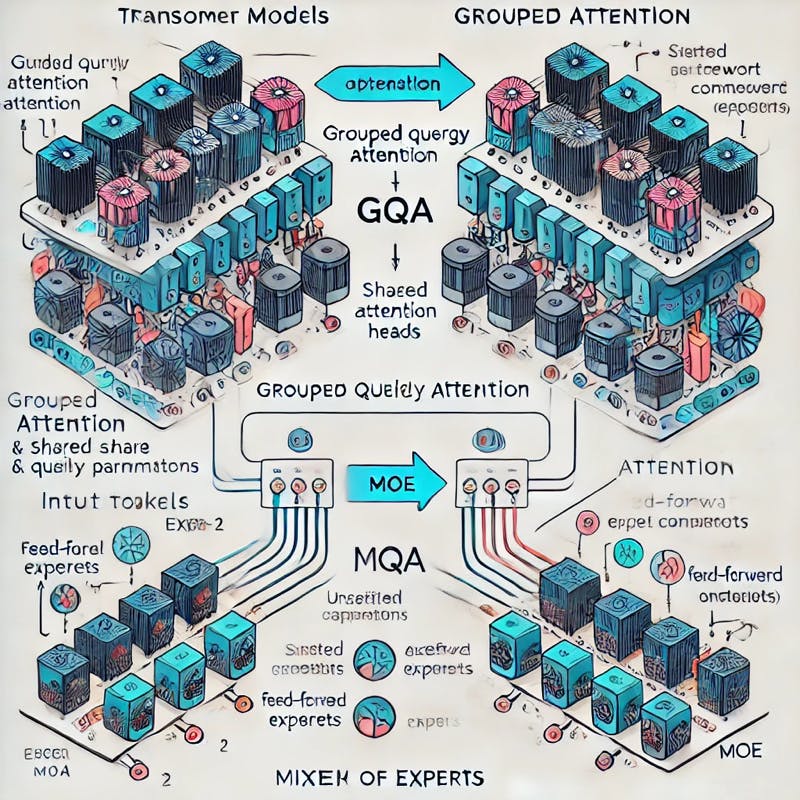

Primer on Large Language Model (LLM) Inference Optimizations: 3. Model Architecture Optimizations

by

November 17th, 2024

Machine Learning Engineer focused on building AI-driven recommendation systems and exploring AI safety.
