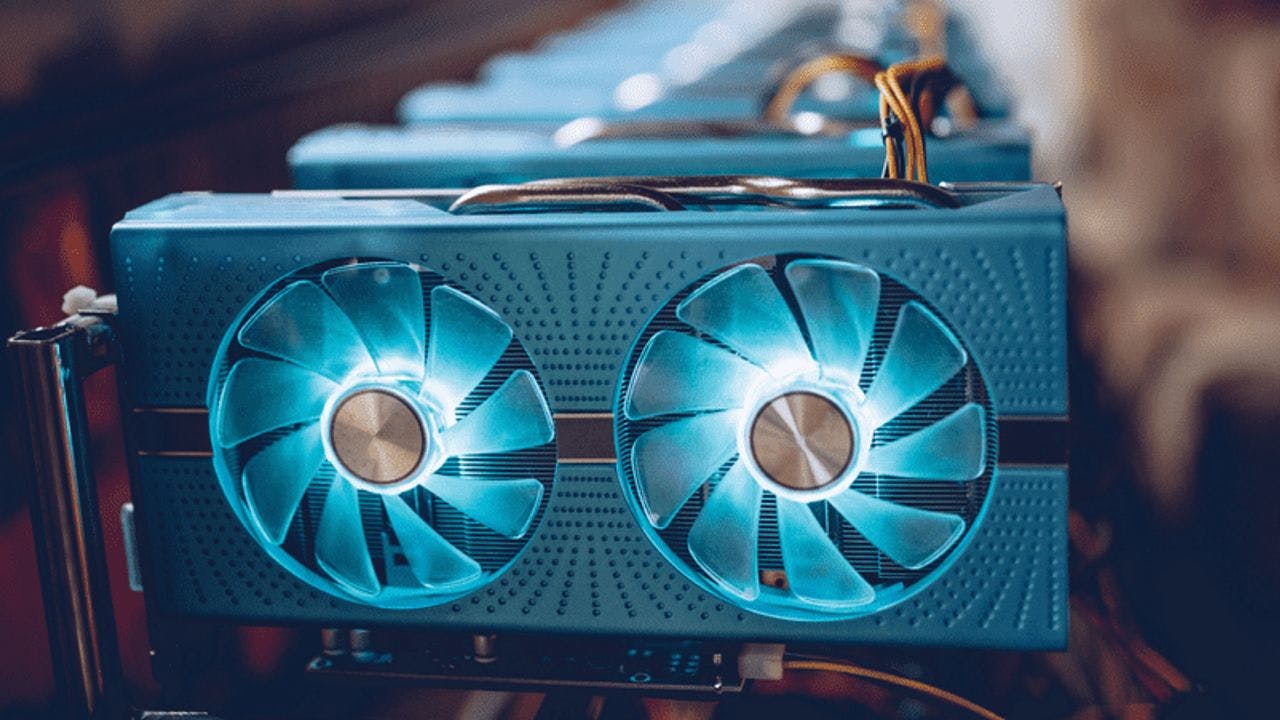

How to Prioritize AI Projects Amidst GPU Constraints

by

August 6th, 2023

Tech advisor & strategist to Fortune 500 executives; featured speaker and author
