
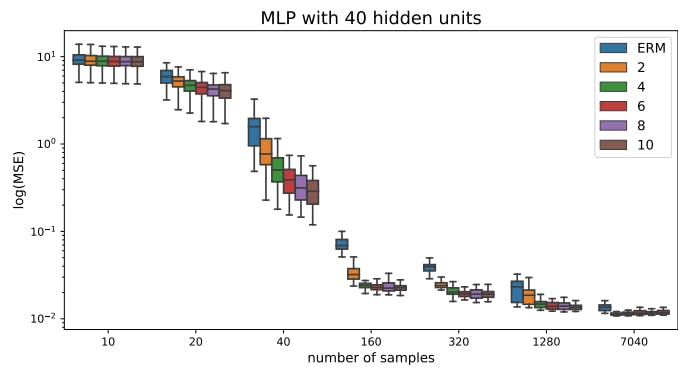

How to Implement ADA for Data Augmentation in Nonlinear Regression Models


November 14th, 2024


Anchoring provides a steady start, grounding decisions and perspectives in clarity and confidence.
