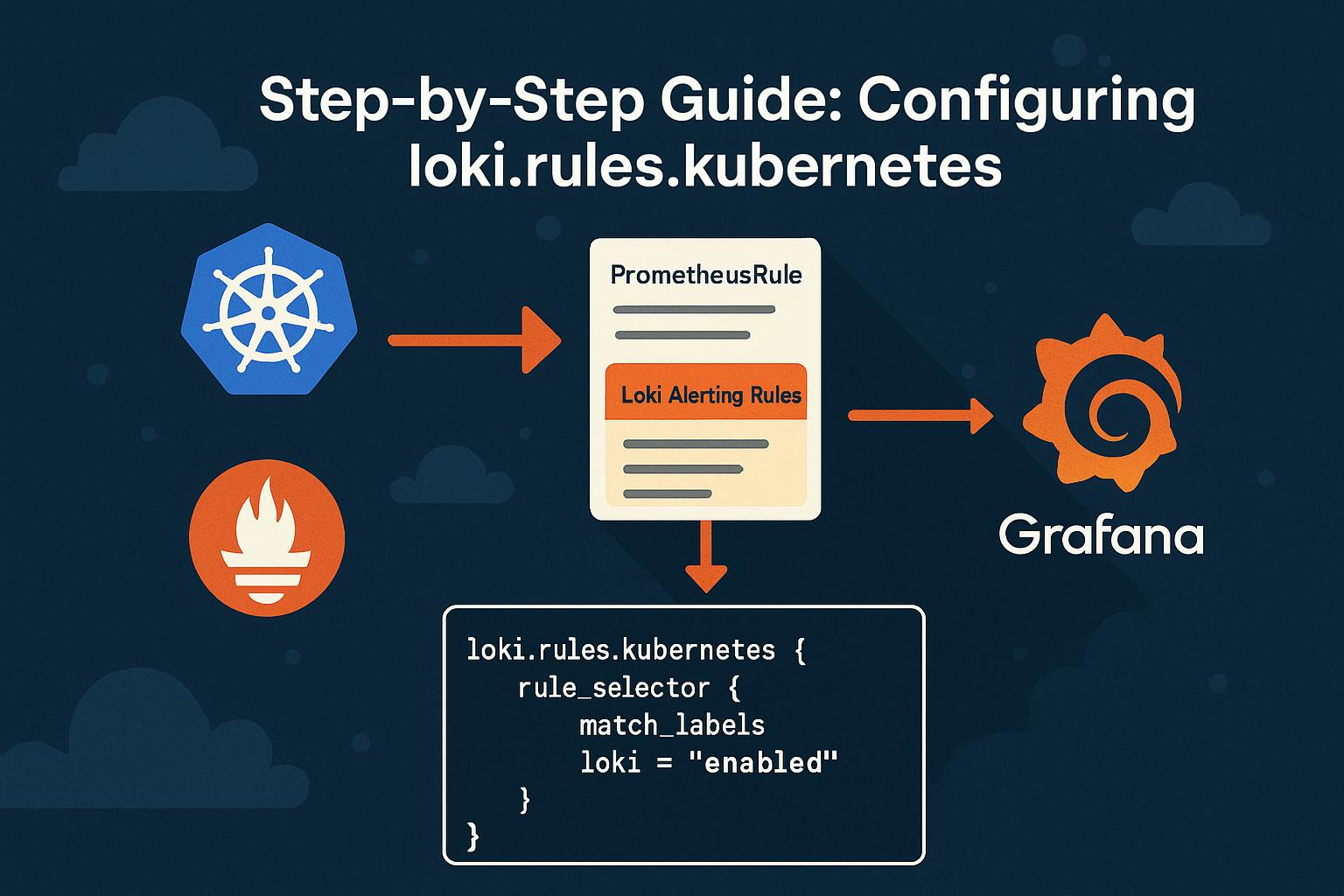

How to Create Loki Alerts Via PrometheusRule Resource

July 9th, 2025

About Author

Lead DevOps Engineer, Co-Founder of ReferrsMe & CrowdFind
