525 reads

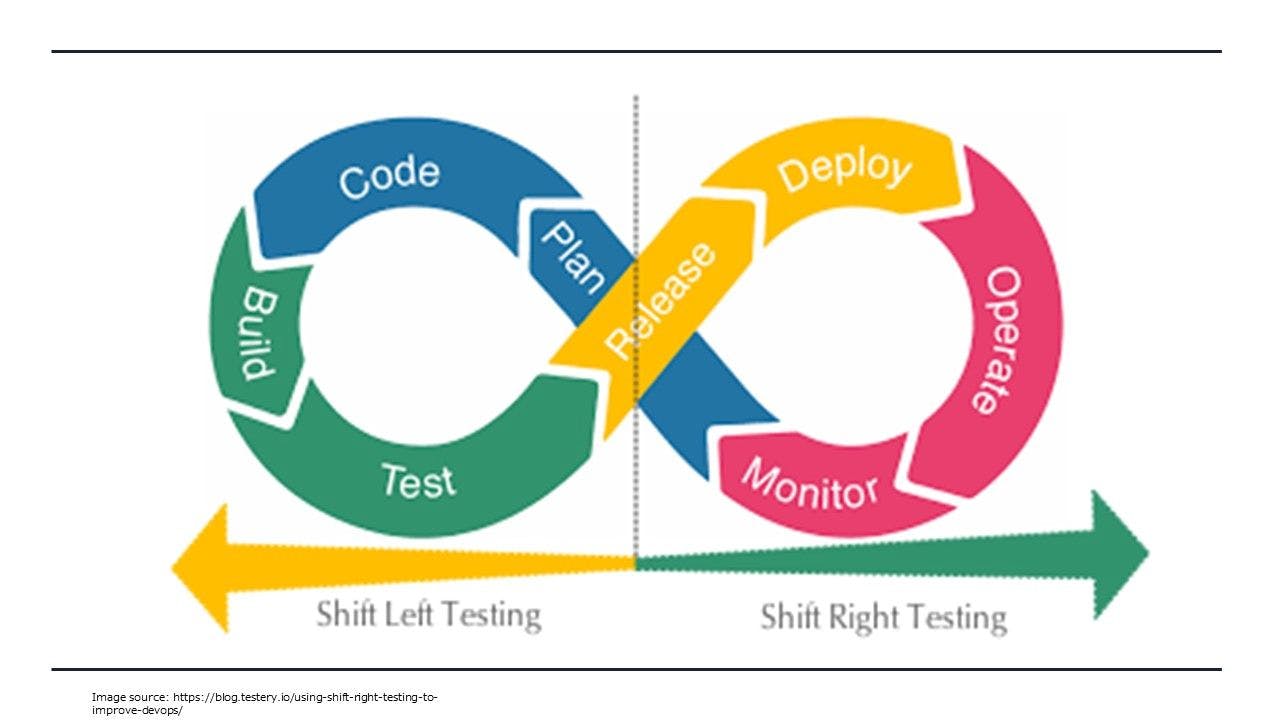

How Shift-Right Testing Can Build Product Resiliency

by

September 15th, 2021

Rohan has 10+ years of experience building large scale distributed systems, developer frameworks and bigdata platforms.

About Author

Rohan has 10+ years of experience building large scale distributed systems, developer frameworks and bigdata platforms.