
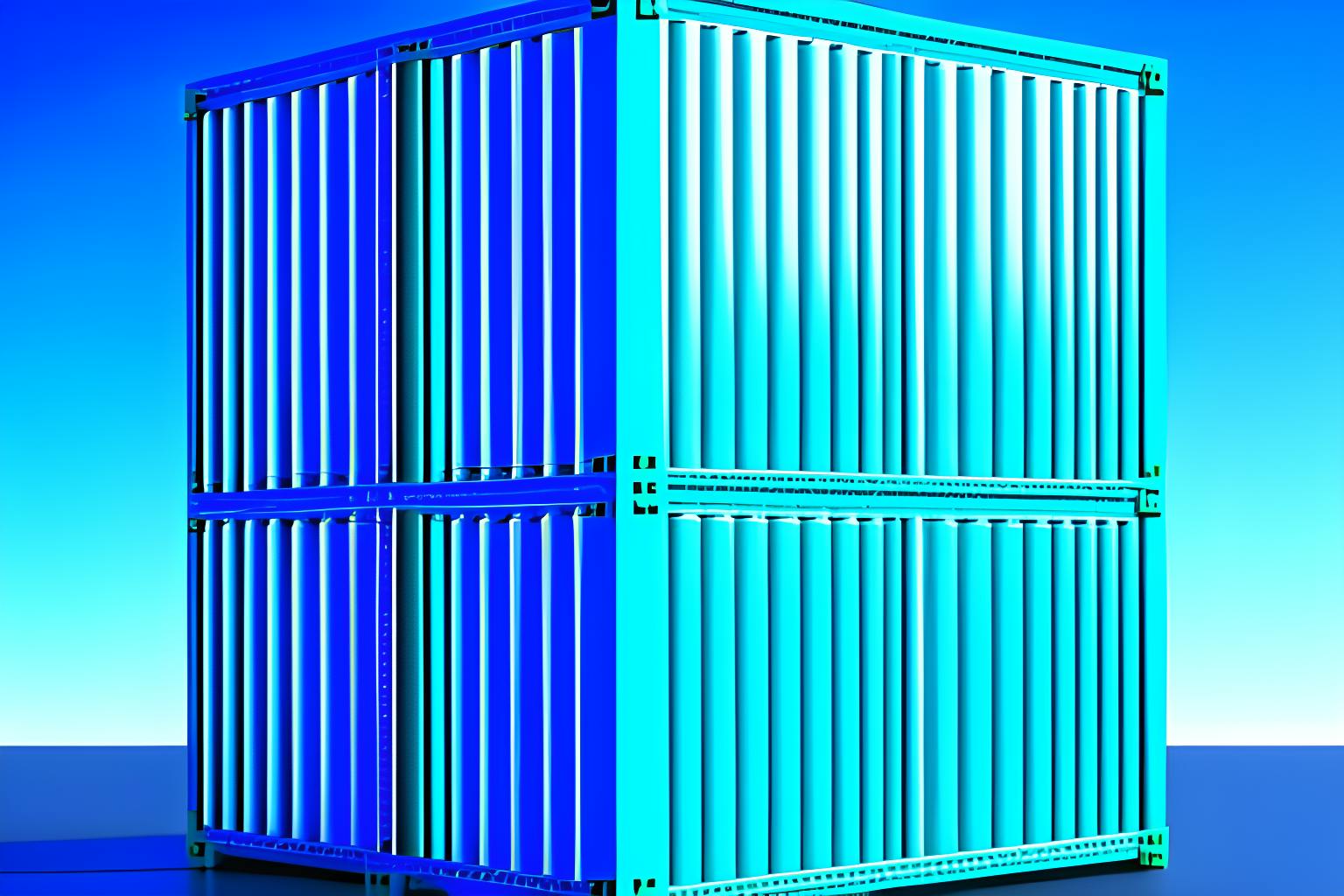

Docker for Beginners: Containerizing a Next.js Application

July 30th, 2023

About Author

Software Engineer, Building syntackle.com
