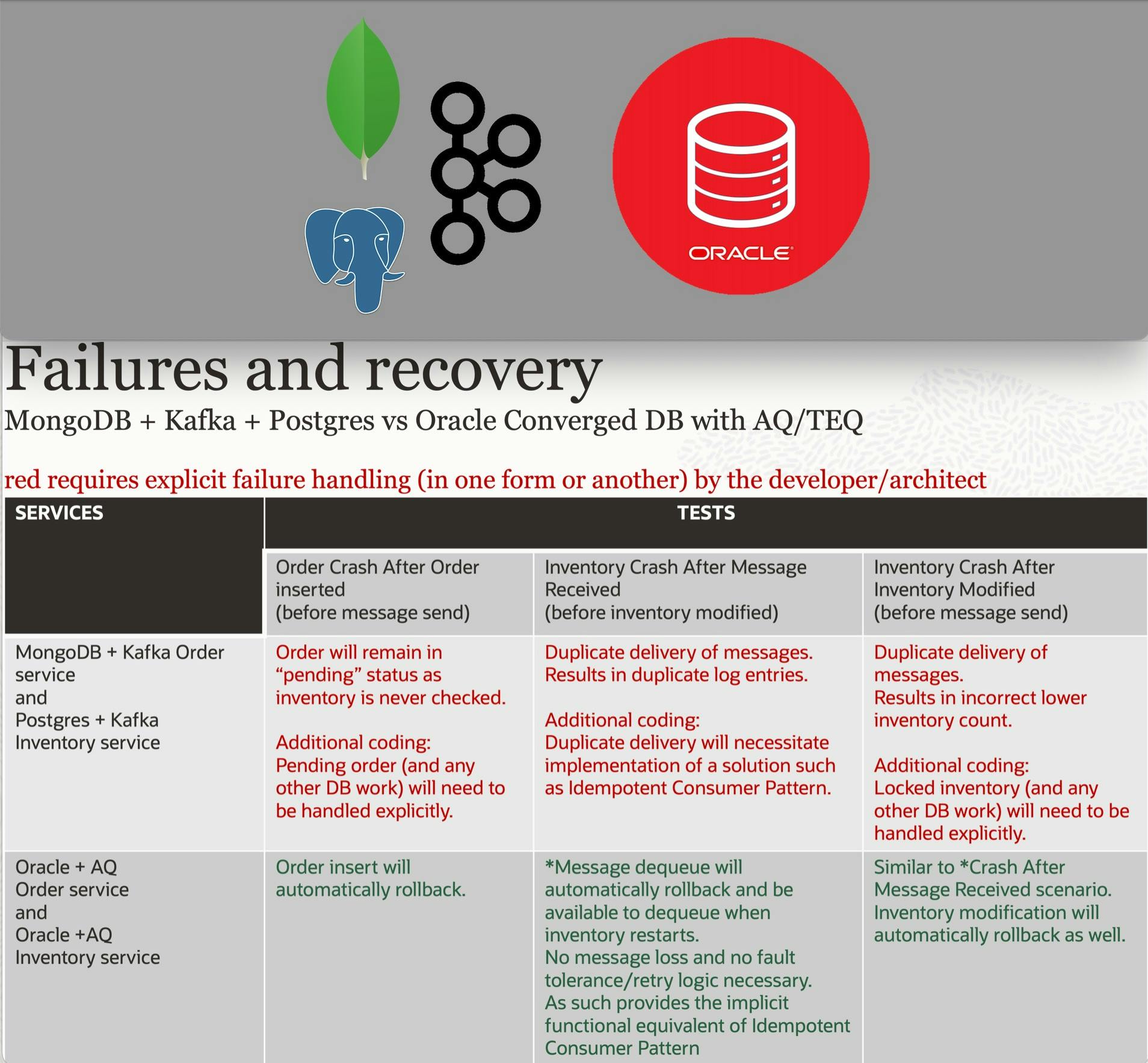

Comparing Apache Kafka with Oracle Transactional Event Queues (TEQ) as Microservices Event Mesh

by

September 13th, 2021

Architect and Developer Advocate, Microservices with Oracle Database. XR Developer
