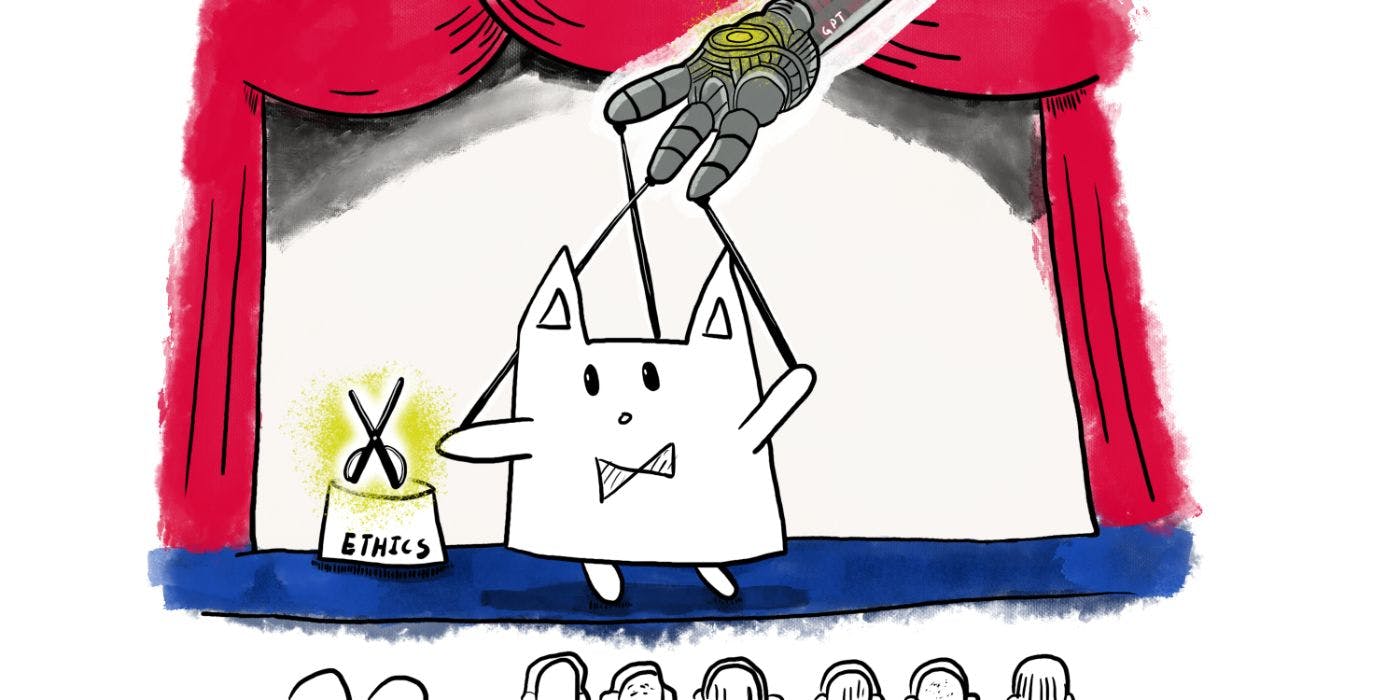

ChatGPT for Office Politics: When is it Ethical?

February 16th, 2023

Physician turned product manager writing about all things medicine, business and technology.
