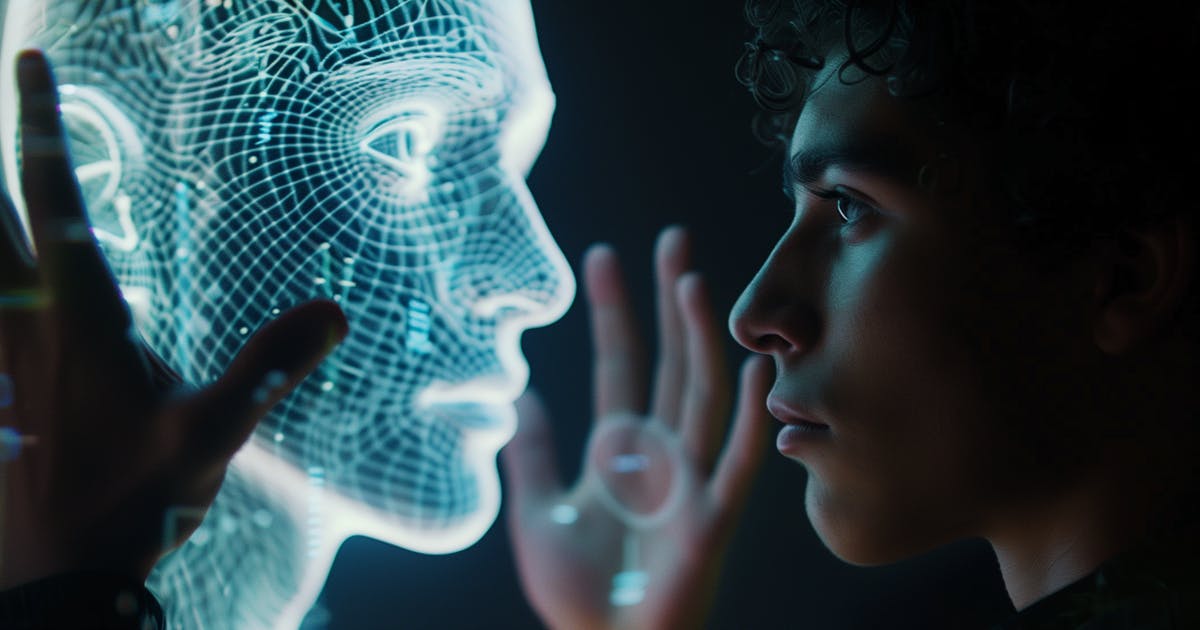

Can You Trust Your AI Twin to Think Like You?

by Giorgio Fazio

November 23rd, 2024

Hi, I'm Giorgio Fazio, a creative director blending art and technology. Online since 1995.
