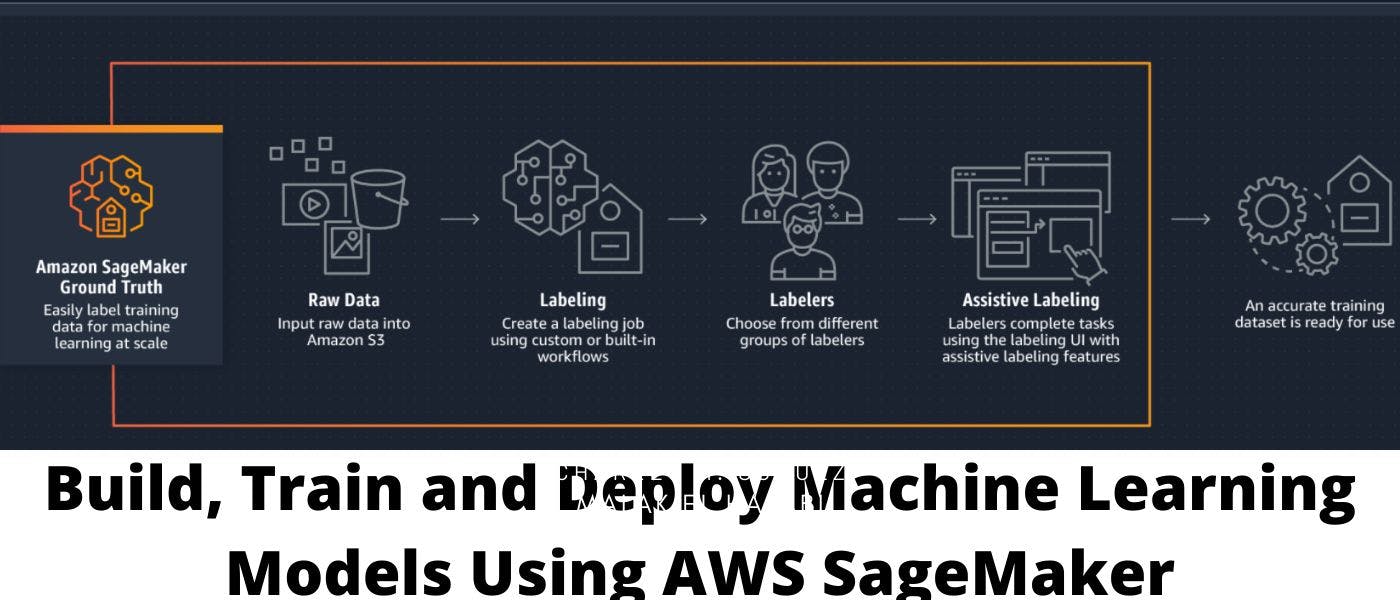

Building Machine Learning Models Using AWS SageMaker

by

November 4th, 2021

About Author

Priya: 10 years of experience in research and content creation; spirituality and data enthusiast; diligent business problem-solver.