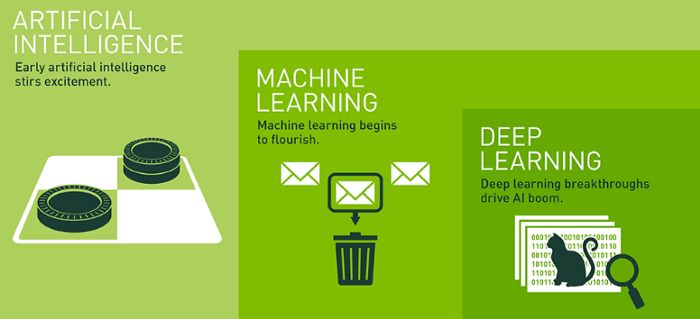

Artificial Intelligence, Machine Learning and Deep Learning Basics

by Jack Ryan

August 26th, 2020

I am Jack Ryan, a marketer and coder. I share stories about free SMTP servers and programming.