
AI Alignment: What Open Source Is Necessary for LLM Safety, Ethics, and Governance?

by

September 18th, 2024


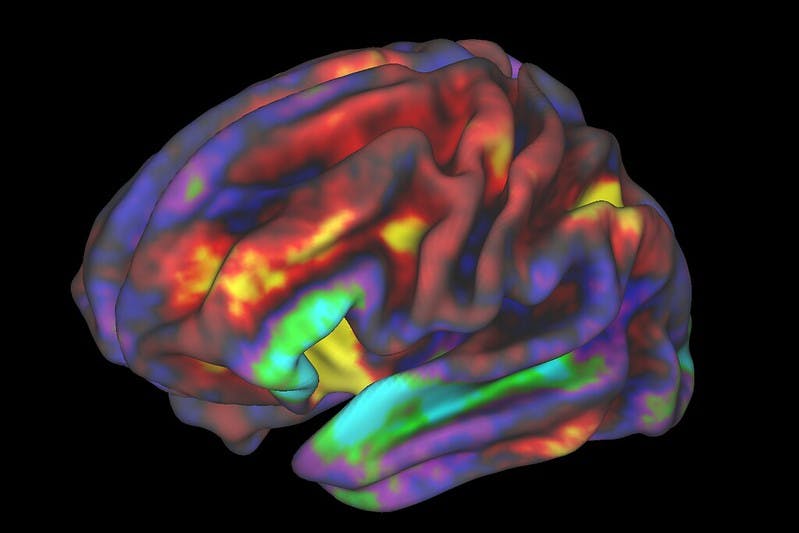

Conceptual Biomarkers and Theoretical Biological Factors for Psychiatric and Intelligence Nosology https://tinyurl.com/
