
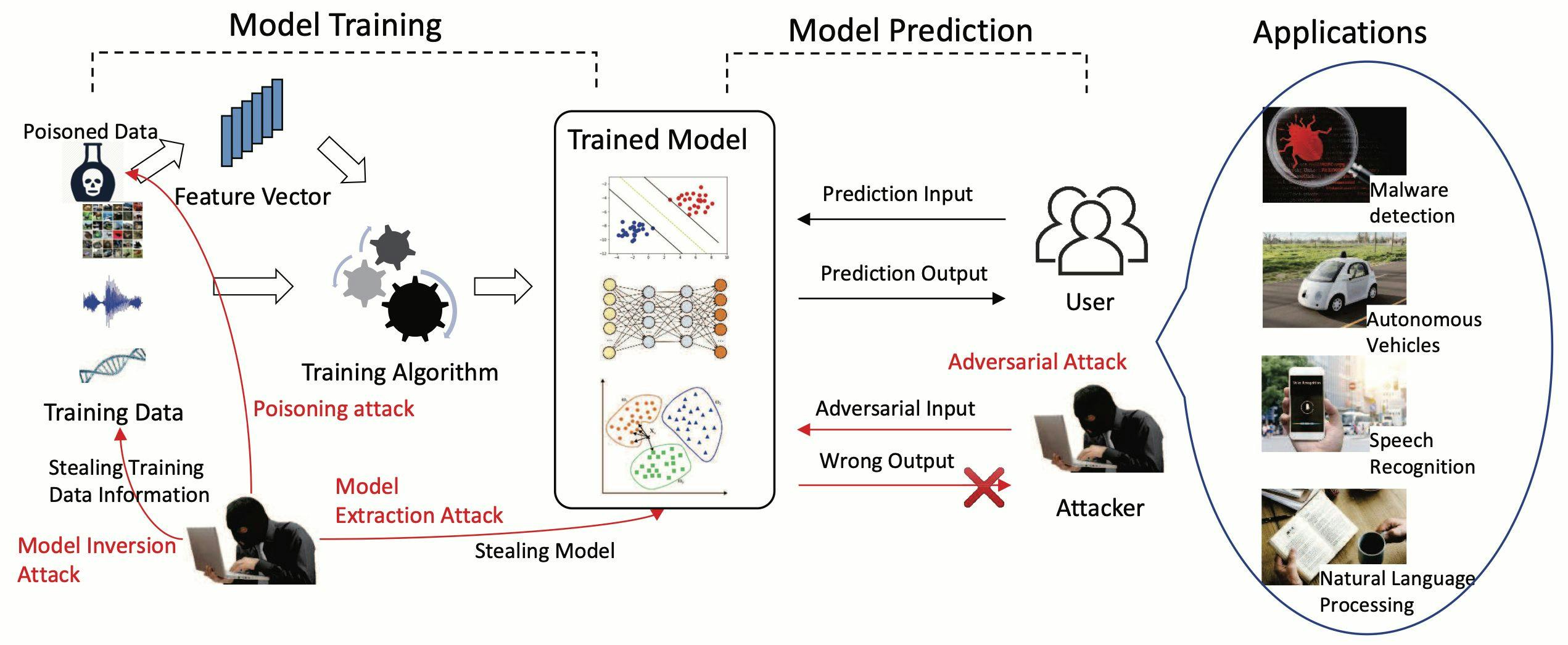

Adversarial Machine Learning: A Beginner’s Guide to Adversarial Attacks and Defenses


January 9th, 2022


Security researcher who continuously learns and applies new concepts and skills. Speaker at several security conferences.
