
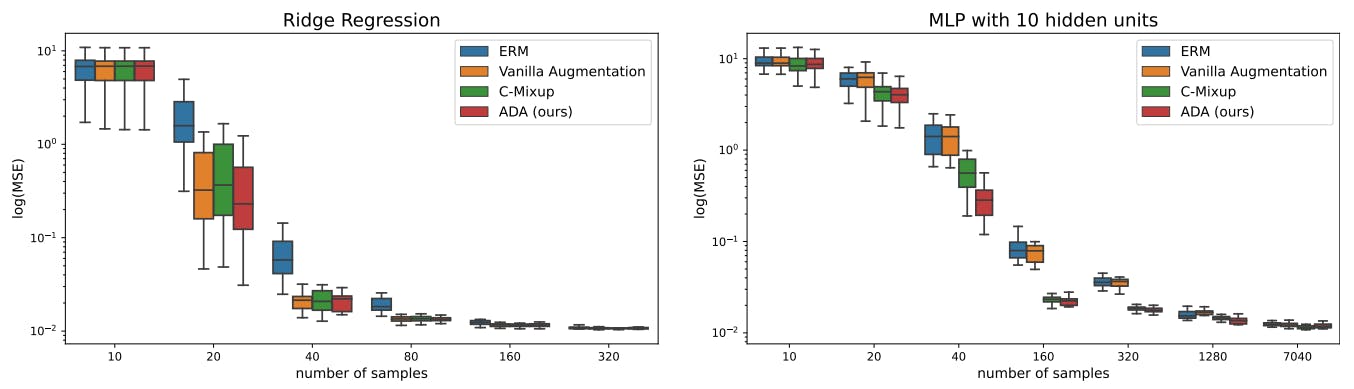

ADA vs C-Mixup: Performance on California and Boston Housing Datasets

by

November 14th, 2024

