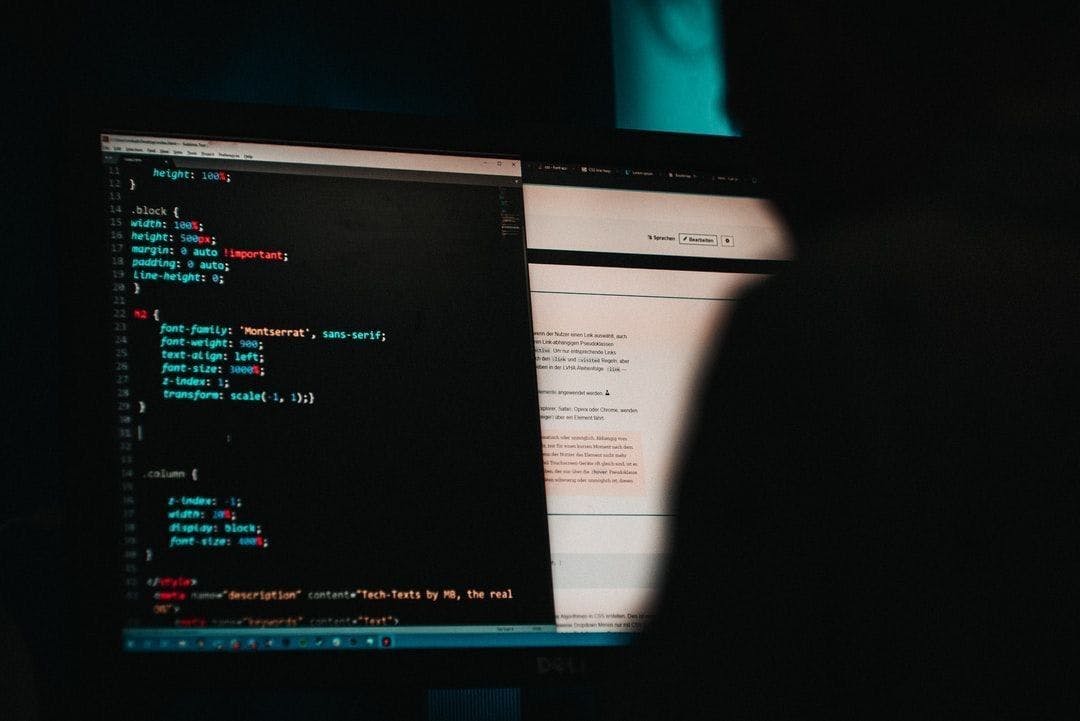

A Guide To Web Security Testing: Part 1 - Mapping Contents

January 11th, 2022

Hello, we write tutorials on cybersecurity and bug bounty on our website and HackerNoon. We
