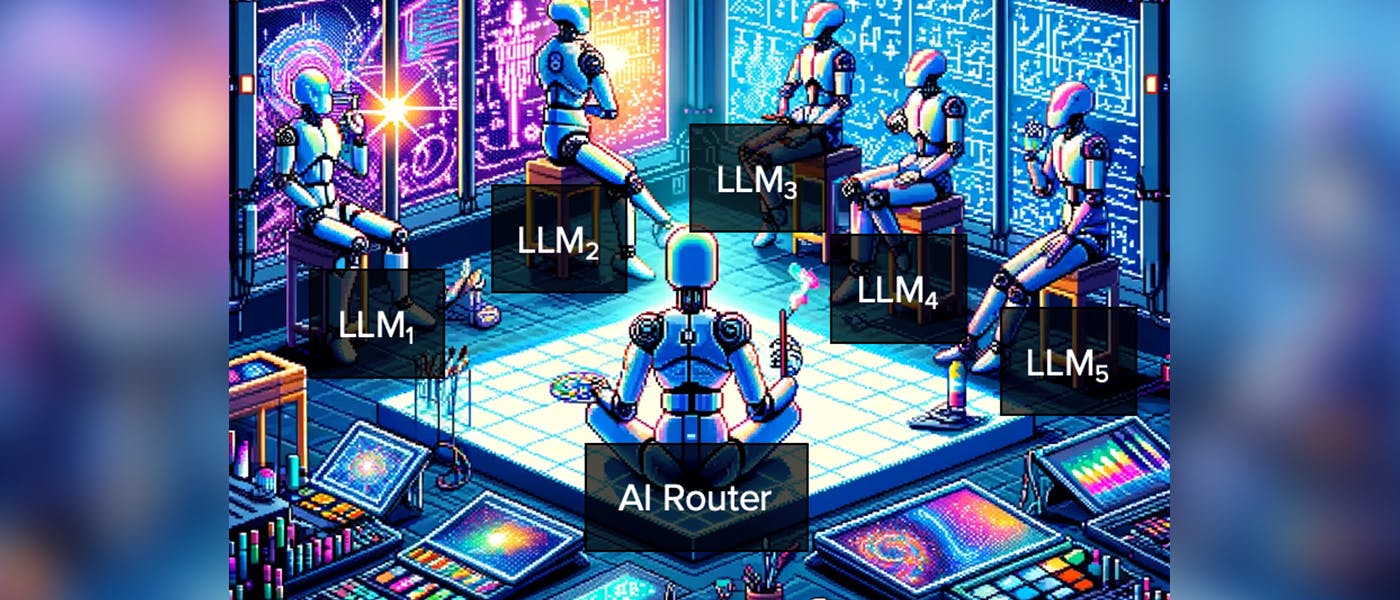

From Chatbots to AI Routing: An Essay

by

March 27th, 2024

Backend Engineer, Growth Hacker, ex-CTO. Experienced in digital, food, data science. Disrupting the world with tech 🚀