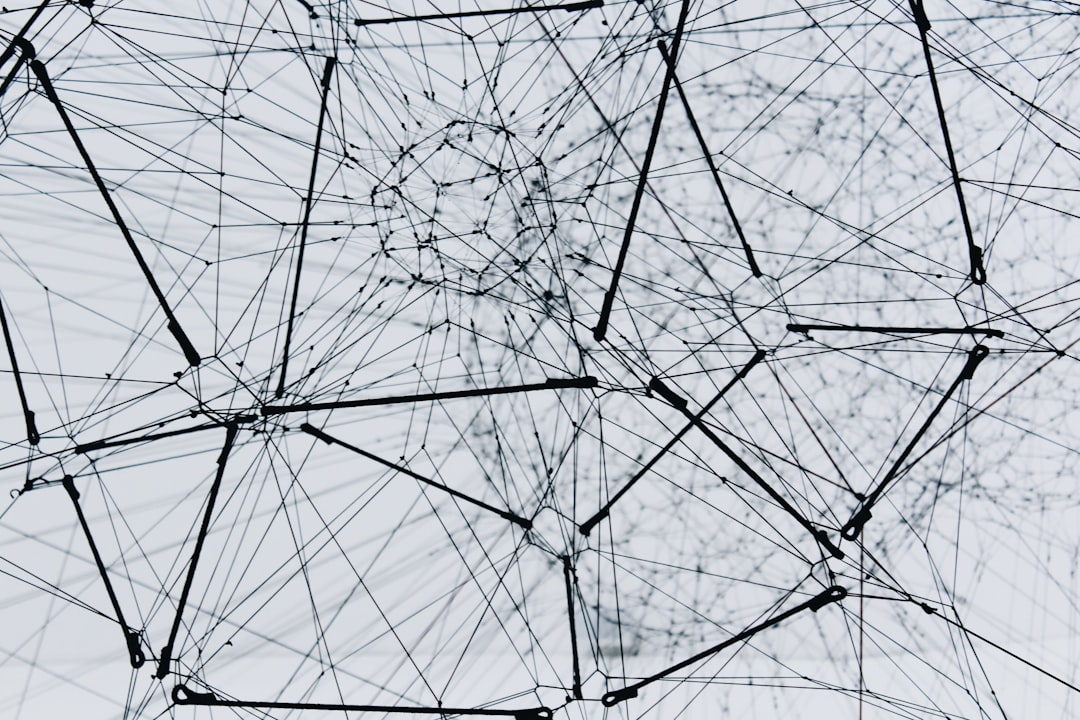

Why Microservices Suck At Machine Learning...and What You Can Do About It

by Simba Khadder

May 7th, 2020

Simba Khadder is an ex-Googler and the creator of StreamSQL.io, a Feature Store for Machine Learning
