1,095 reads

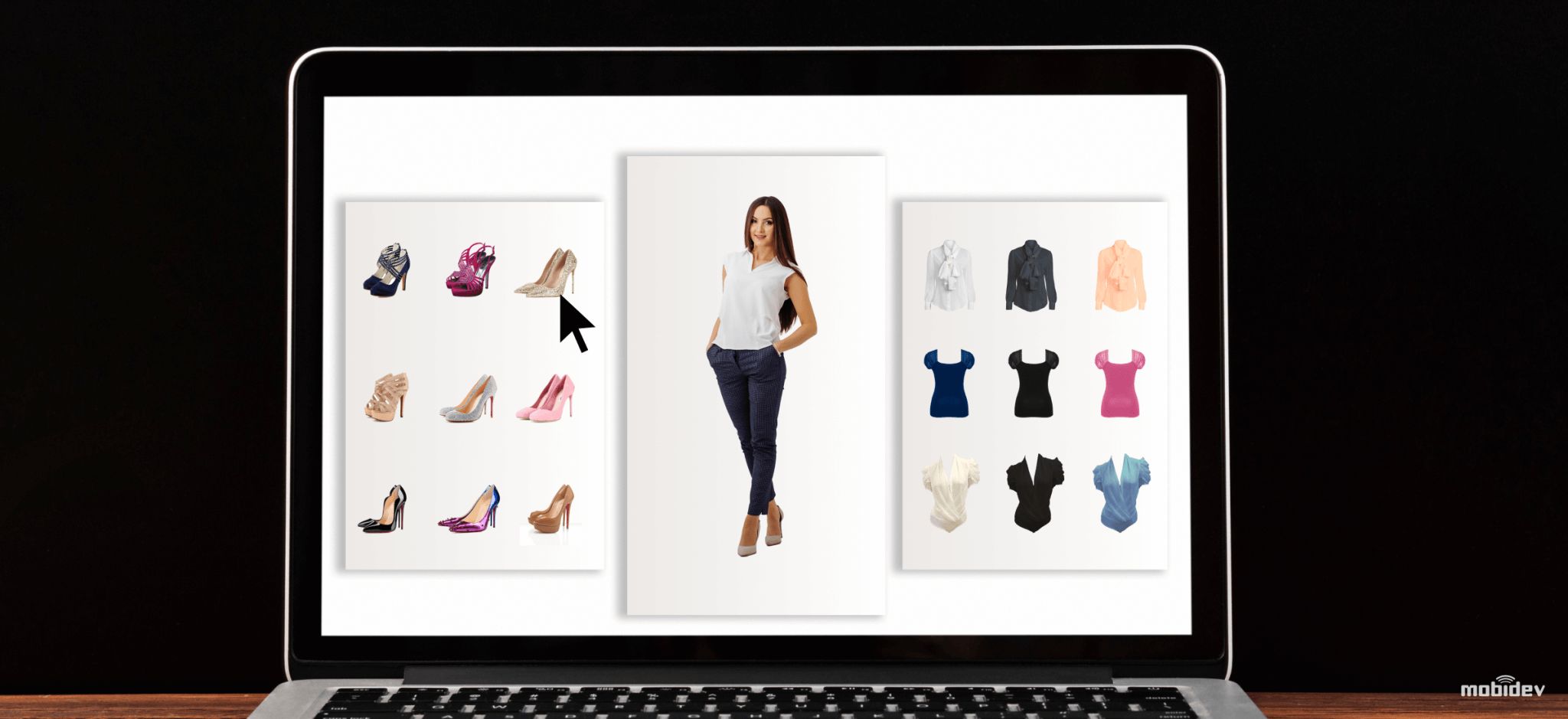

Virtual Fitting Room Technologies To Boost Retail Sales in a Post COVID-19 World

by

October 16th, 2020

Audio Presented by

Trusted software development company since 2009. Custom DS/ML, AR, IoT solutions https://mobidev.biz

About Author

Trusted software development company since 2009. Custom DS/ML, AR, IoT solutions https://mobidev.biz