2,783 reads

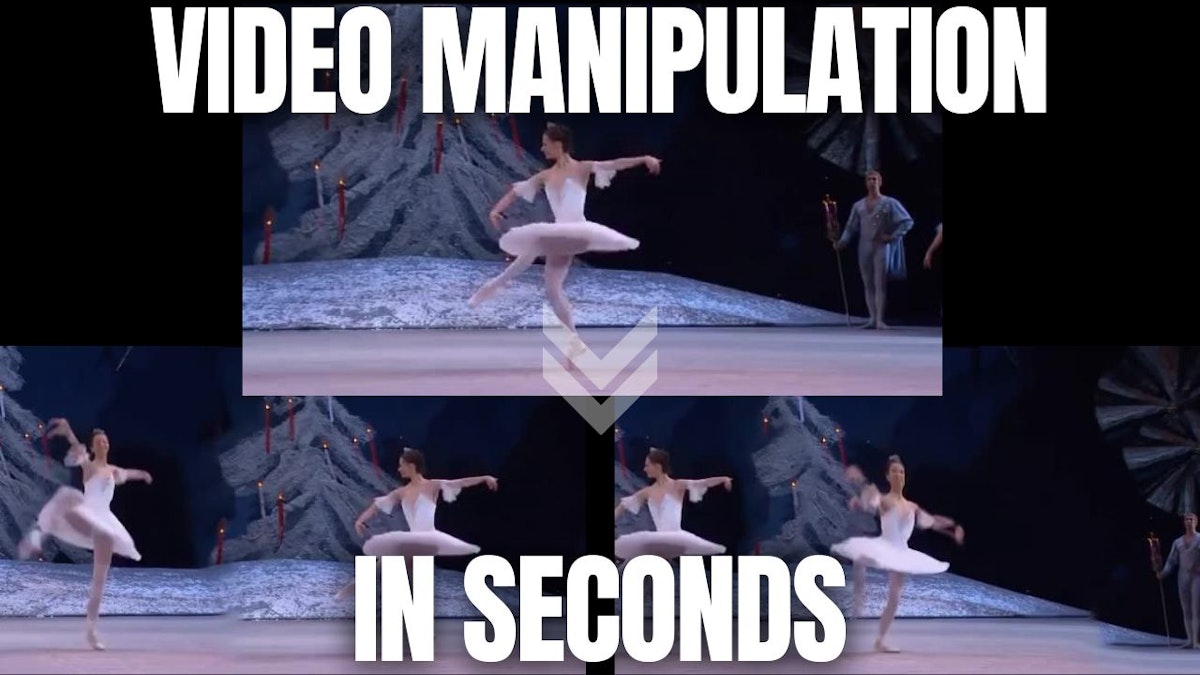

This AI Performs Seamless Video Manipulation Without Deep Learning or Datasets

Too Long; Didn't Read

Have you ever wanted to edit a video to remove or add someone, change the background, make it last a bit longer, or change the resolution to fit a specific aspect ratio without compressing or stretching it? For those of you who already ran advertisement campaigns, you certainly wanted to have variations of your videos for AB testing and see what works best. Well, this new research by Niv Haim et al. can help you do all of the about in a single video and in HD! Indeed, using a simple video, you can perform any tasks I just mentioned in seconds or a few minutes for high-quality videos. You can basically use it for any video manipulation or video generation application you have in mind. It even outperforms GANs in all ways and doesn’t use any deep learning fancy research nor requires a huge and impractical dataset! And the best thing is that this technique is scalable to high-resolution videosI explain Artificial Intelligence terms and news to non-experts.

About @whatsai

LEARN MORE ABOUT @WHATSAI'S

EXPERTISE AND PLACE ON THE INTERNET.

EXPERTISE AND PLACE ON THE INTERNET.

L O A D I N G

. . . comments & more!

. . . comments & more!