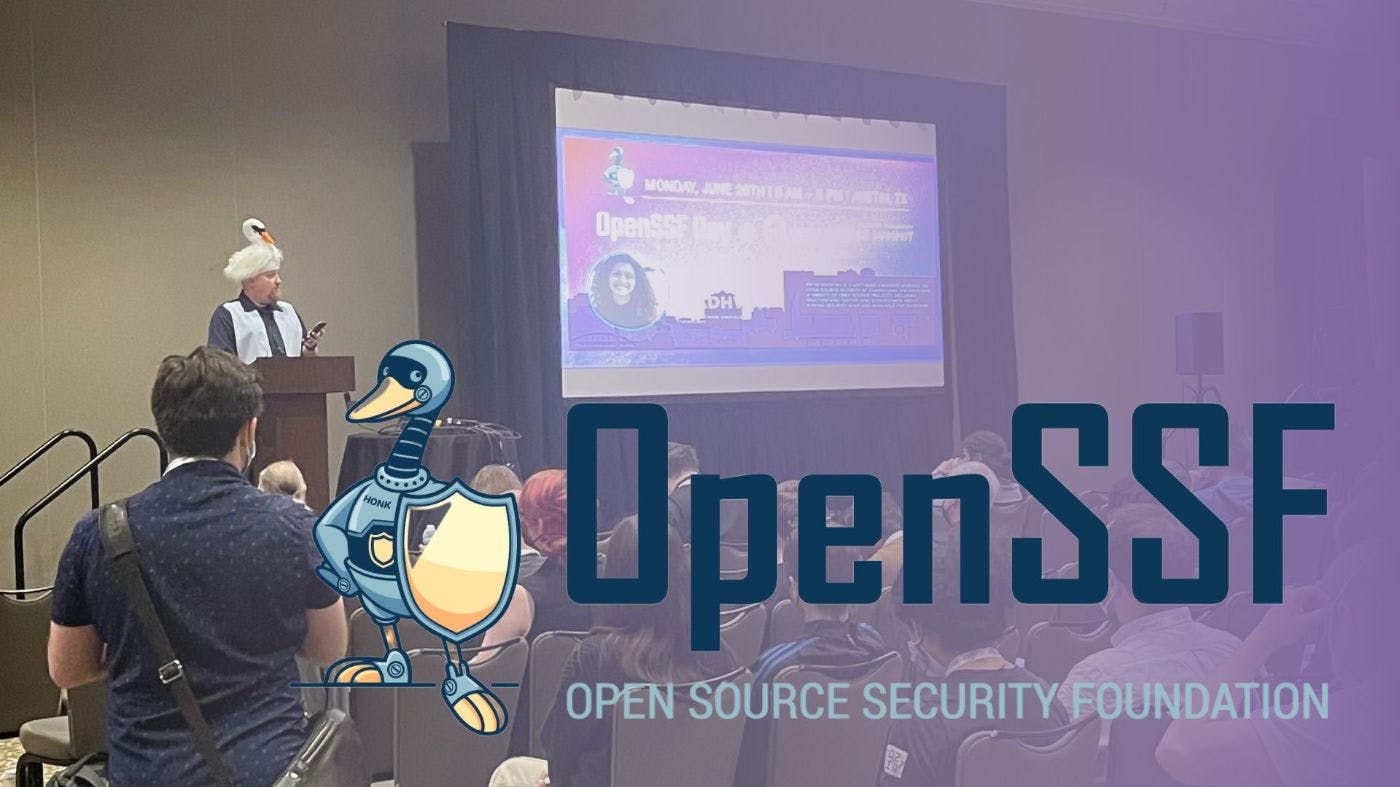

Quick Guide to the Open Source Security Summit

June 29th, 2022

Focused on the open source software supply chain to build a better digital future for all of us.
