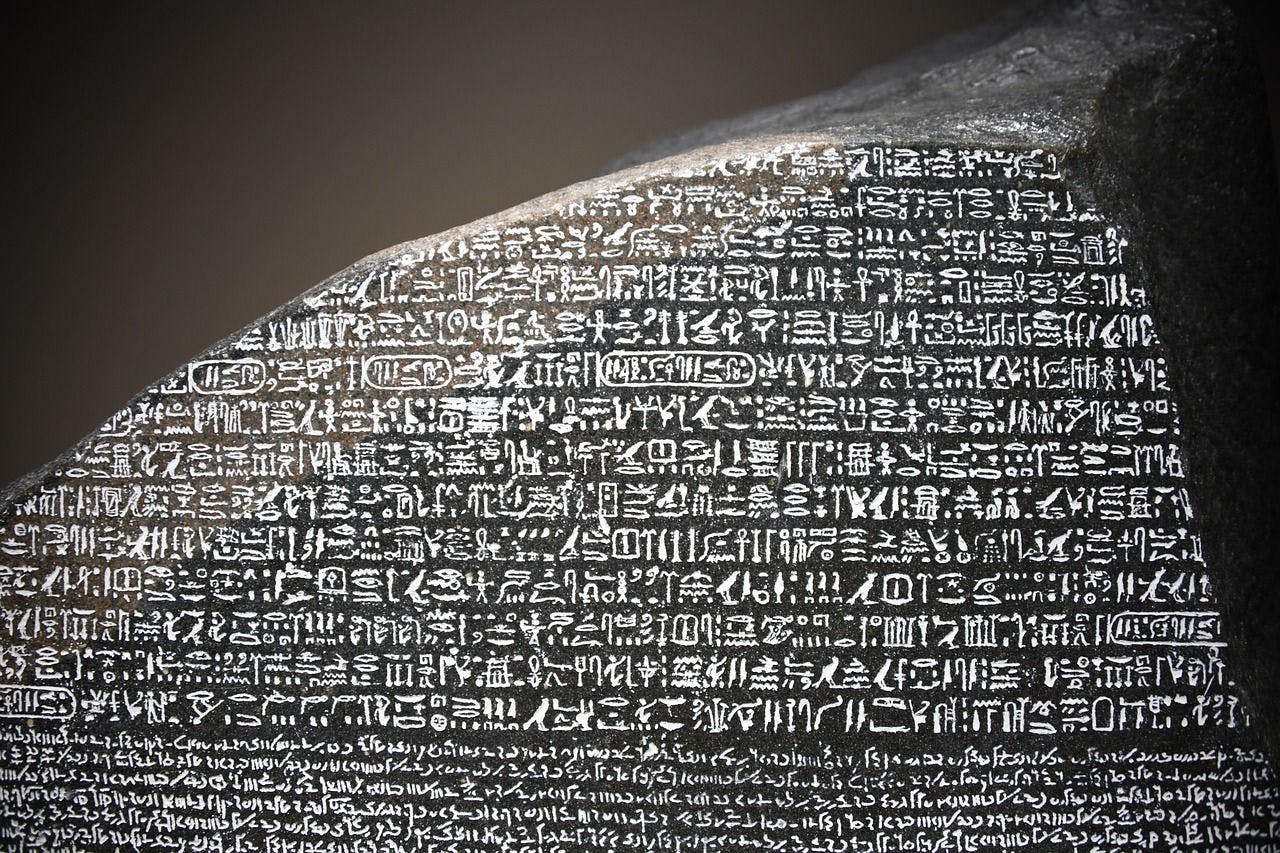

Rosetta Stone and machine-interpretability: Wikidata and a modeling paradigm

by

March 8th, 2024

The #1 Publication focused solely on Interoperability. Publishing how well a system works or doesn't w/ another system.