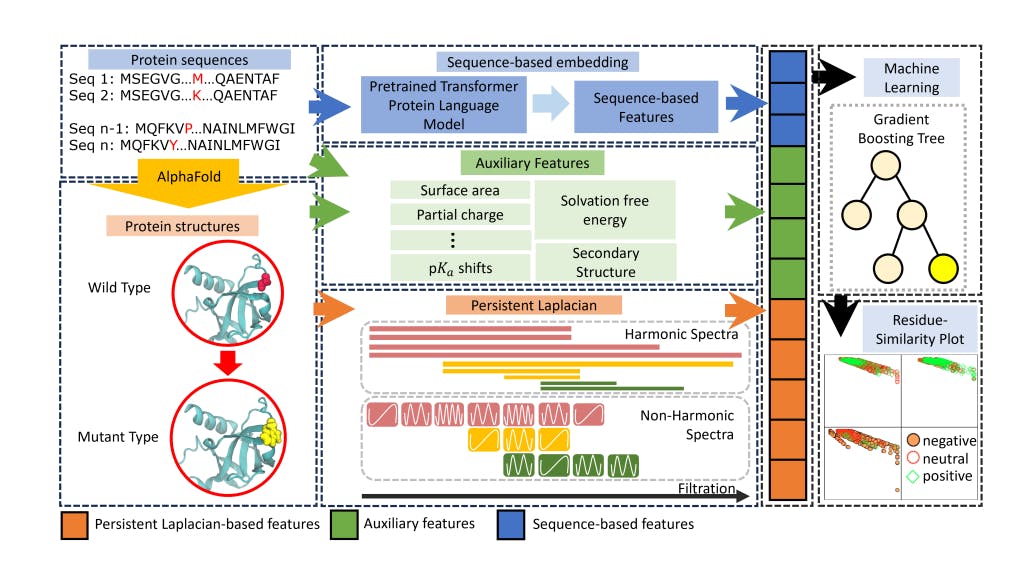

Persistent Laplacian and Pre-Trained Transformer for Protein Solubility Changes Upon Mutation

by

February 16th, 2024

Mutation: the process of changing in form or nature. We publish the best academic journals and firsthand accounts of mutation.
