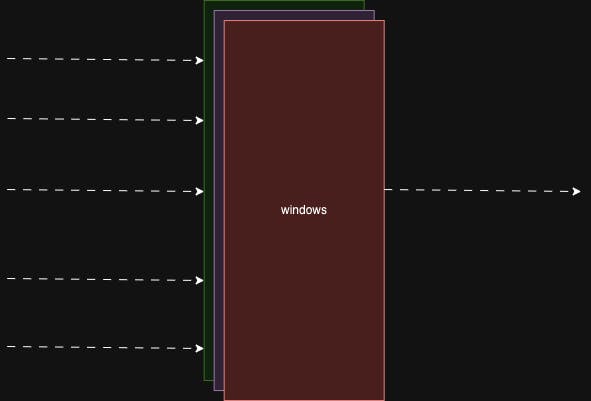

Stream Processing - Windows

November 8th, 2024

Engineering Leader & Mentor @ Cisco. Former Architect @ PayPal. Technology Evangelist @ HackerNoon. Redefining scalable
