572 reads

New SOTA Image Captioning: ClipCap

Too Long; Didn't Read

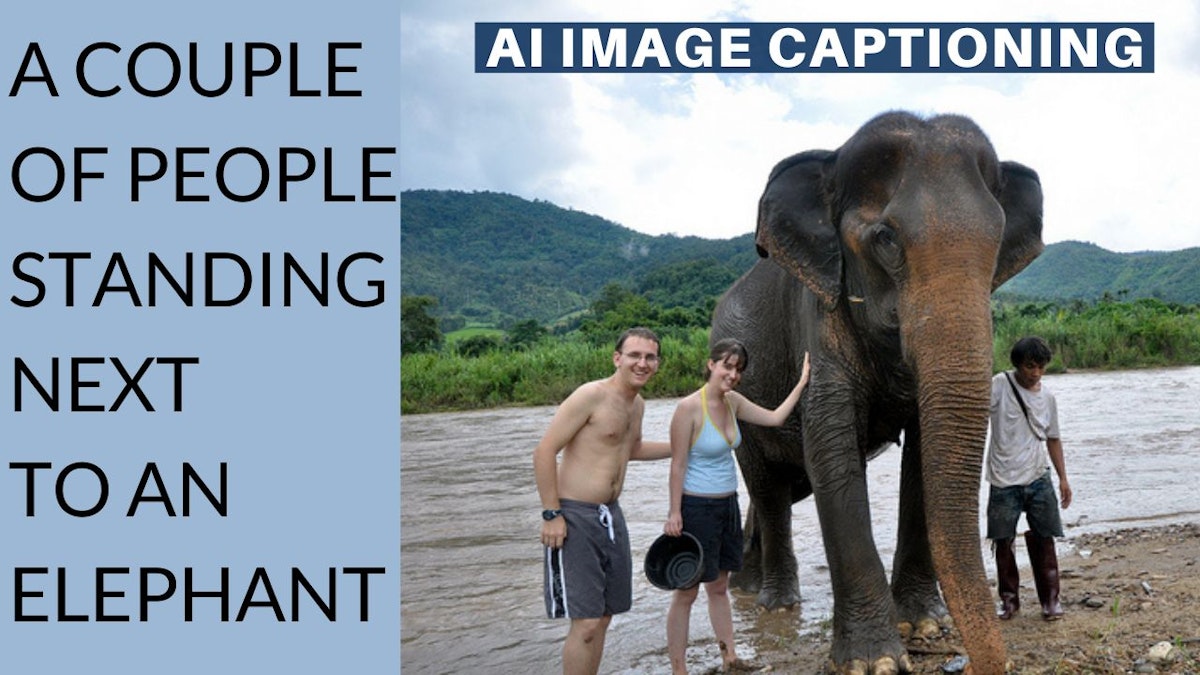

DALL-E beat all previous attempts to generate images from text input using CLIP, a model that links images with text as a guide. A very similar task called image captioning may sound really simple but is, in fact, just as complex. It is the ability of a machine to generate a natural description of an image. It’s easy to simply tag the objects you see in the image but it is quite another challenge to understand what's happening in a single 2-dimensional picture, and this new model does it extremely well!I explain Artificial Intelligence terms and news to non-experts.

About @whatsai

LEARN MORE ABOUT @WHATSAI'S

EXPERTISE AND PLACE ON THE INTERNET.

EXPERTISE AND PLACE ON THE INTERNET.

L O A D I N G

. . . comments & more!

. . . comments & more!