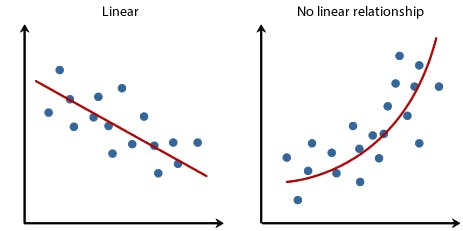

Linear Regression and Its Mathematical Implementation

August 9th, 2019

Enjoys reading, writes amazing blogs, and can talk about Machine Learning for hours.