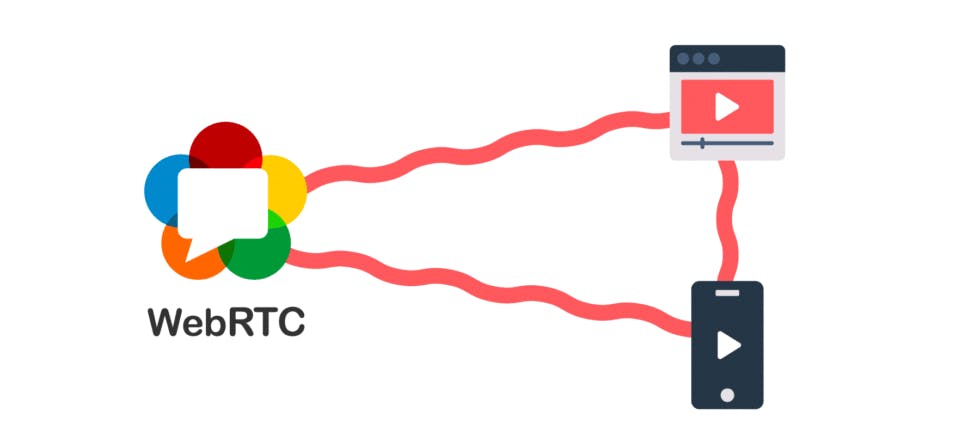

Learning WebRTC in Practice: Best Tools and Demos

by

March 18th, 2024

Software Engineer enjoying working with any platform. https://www.linkedin.com/in/beskrovnov/
